The fourth book of rationality

This is part 4 of 6 in my series of summaries. See this post for an introduction.

Part IV

Mere Reality

Interlude: A Technical Explanation of Technical Explanation


Part IV

Mere Reality

Moving on from evolutionary and cognitive

models, this part explores the nature of mind and the character of physical

law. What kind of world do we live in, and what is our place in that world? We

will look at past scientific mysteries, parsimony, and the role of science in

individual rationality.

Previous sections have

explained patterns in human reasoning and behavior through the lenses of

mathematics, physics and biology, but haven’t said much about humanity’s place

in nature or the natural world in its own right. Humans are not only

goal-oriented systems, but also physical systems. We are built out of inhuman

parts, like atoms. We can relate the human world to the world revealed by

physics through reductionism: in

particular, by applying it to present-day controversies in science and

philosophy, like the debates on consciousness and quantum physics.

The philosopher Thomas Nagel

famously asked whether anyone can ever know what it’s like to be a bat – what

it would subjectively feel like. Even if we could perfectly model bat neurology

and predict bat behavior, how could we be certain that the bat isn’t just an

unconscious automaton? David Chalmers argues that third-person cognitive models

can never fully capture first-person consciousness, and that consciousness is a

“further fact” not fully explainable by the physical facts. But can this

argument stand up to a technical

understanding of how explanation and belief work?

The other topic, quantum

mechanics, is our best mathematical model of the universe to date. The

Schrödinger equation deterministically captures everything there is to know

about the dynamics of physical systems, including “superpositions”… as long as

we aren’t looking. Whenever we make observations, the superpositions seem to

vanish without a trace, and we need to use Born’s probabilistic rule to make

predictions. The question of what all of this even means

has produced many interpretations of quantum mechanics. Yudkowsky uses this

scientific controversy as a proving ground.

15 | Lawful Truth

This chapter introduces the basic links between physics and human cognition.

In the late 18th century,

Antoine-Laurent de Lavoisier discovered that people, like fire, consume fuel

and oxygen and produce heat and carbon dioxide. Today, phosphorus (part of ATP,

crucial for metabolism) is found in matches. We may use different surface-level

rules for different phenomena, but the underlying laws that govern nature are

not so divided. Due to this, you can’t change just one thing in the world and

expect the rest to continue working as before. For example, if matches didn’t

light, we couldn’t breathe. Reality is laced together a lot more tightly than

we might like to believe.

Newton’s unification of falling apples with the

course of planets gave rise to the idea of universal laws with no exceptions.

Even though our models of fundamental

laws may not last, reality itself is (and was) always constant and rigid. To

think the universe itself is whimsical is to mix up the map and the territory. In

our everyday lives we are accustomed to rules with exceptions, but apparent

violations of the basic laws of the universe exist only in our models, not in

reality. Remember: since the beginning, not one unusual thing has ever

happened.

Just as physics came before the physicist,

mathematics came before the mathematician in a structured universe. The beauty

of math is discovering new

properties that you never built into the mathematical objects you created (like

building a toaster and realizing that your invention also, for some unexplained

reason, acts as a rocket jetpack and MP3 player). Don’t prematurely end the search

for mathematical beauty by trying to impose order; sometimes you have to dig a

little deeper. Finding hidden beauty isn’t certain, but it has happened frequently enough

throughout history to be better than unfounded faith. Consider the sequence {1,

4, 9, 16, 25, …}. Can you predict the next item in the sequence? You could take

the first differences to get {4-1, 9-4, 16-9, …} = {3, 5, 7, 9,…} and then

second differences to get {5-3, 7-5, 9-7, …} = {2, 2, 2, …}.

If you predict the next second

difference is also 2, then the next first difference must be 11, hence the next

item in the original sequence must be 36. But perhaps the “messy real world”

lacks the order of these abstract mathematical objects? It may seem messy for

three reasons: first, we may not actually know the rules due to empirical uncertainty; second, even if

we do know all of the math, we may not have enough computing power to do the

full calculation due to logical

uncertainty; and third, even if we could compute it, we still don’t know

where in the mathematical universe we are living due to indexical uncertainty. We are not omniscient. However, uncertainty

exists in the map, not in the territory. Our best guess is that the “real”

world is perfectly regular math. In many cases we’ve already found the

underlying simple and stable level – which we name “physics”.
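The difference-table reasoning for {1, 4, 9, 16, 25, …} can be checked mechanically. Below is a minimal Python sketch (the function names are illustrative, not from the source). Like the text, it assumes that once a row of differences is constant, it stays constant, and then rebuilds the next term upward from that row.

```python
from typing import List

def differences(seq: List[int]) -> List[int]:
    """Return the first differences of seq: b - a for each consecutive pair (a, b)."""
    return [b - a for a, b in zip(seq, seq[1:])]

def extrapolate(seq: List[int]) -> int:
    """Predict the next term, assuming the deepest row of differences stays constant.

    Take differences repeatedly until a row is constant, then add the last
    element of each row back up to the original sequence.
    """
    rows = [list(seq)]
    while len(set(rows[-1])) > 1:
        rows.append(differences(rows[-1]))
    next_value = rows[-1][-1]  # the constant row simply repeats its value
    for row in reversed(rows[:-1]):
        next_value = row[-1] + next_value
    return next_value

squares = [1, 4, 9, 16, 25]
print(differences(squares))               # [3, 5, 7, 9]
print(differences(differences(squares)))  # [2, 2, 2]
print(extrapolate(squares))               # 36
```

The same procedure predicts 216 after the cubes {1, 8, 27, 64, 125}, where the third differences are the constant row. The prediction is only as good as the assumed regularity, which is exactly the bet the text describes.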

Bayesian probability theory is attractive

because it comprises unique and coherent laws,

as opposed to the ad-hoc tools of frequentist statisticians. Bayesians expect

probability theory and rationality to be self-consistent, neat, and even

beautiful, which is why they think Cox’s

theorems are so important. Rationality is fundamentally math, and using a

useful approximation to a law (in the map) doesn’t change the law (in the

territory). Any approximation to the optimal answer will be explainable in

terms of Bayesian probability theory, and just because you may not know the

explanation does not mean no explanation exists. Instead of thinking in terms

of tricks to throw at particular problems, we should think in terms of the

extent to which we approximate the optimal Bayesian theorems.

There is a proverb that “outside the

laboratory, scientists are no wiser than anyone else”. If there’s any truth to

this, we should be alarmed. Rationality should apply in everyday life, not just

in the laboratory. We should be disturbed when scientists believe wacky ideas

(e.g. religion), because it means they probably don’t truly understand why the

scientific rules work on a gut level, but blindly practice scientific rituals

like a social convention. Those who understand the map-territory distinction

and see that reality is a single unified process will integrate their knowledge.

The Second Law of Thermodynamics (the entropy of a closed system never

decreases) follows from Liouville’s theorem: the phase space volume of a closed system is conserved over time. The link between

heat and probability makes this Bayesian. Any rational mind does “work” in the

thermodynamic sense, not just the sense of mental effort. Like a car engine or

refrigerator, your brain (an engine of

cognition) must interact thermodynamically with the physical world to form

accurate beliefs about something. In other words, you really do have to observe

it. True knowledge of the unseen would violate the laws of physics.

People tend to think that teachers tell them

things that are certain and that this is like an authoritative order that must

be obeyed, whereas a probabilistic belief is like a mere suggestion. Probabilities

are not logical certainties, but the governing laws of probability are harder

than steel. It is still mandatory to expect a smashed egg not to spontaneously

reform. It is still mandatory to expect a glass of boiling-hot water to burn

your hand rather than cool it, even if you don’t know that with certainty. So

beware believing without evidence and saying “no one can prove me wrong!”

Otherwise you’ll end up building a vast edifice of justification and confuse

yourself just enough to conceal the magical step, similar to people designing

“perpetual motion machines”.

Philosophers have spilled ink over the nature

of words and various cognitive phenomena. But it was Bayes all along! Mutual

information between a mind and its environment is physical negentropy, which is

Bayesian evidence, implying that any useful cognitive process must to some

extent be in harmony with Bayes-structure. For a mind that arrives at true

beliefs or better-than-random beliefs, there must be at least one process with

a sort-of Bayesian structure somewhere, or it couldn’t possibly work. The quest

for the hidden Bayes can be exciting, because Bayes-structure can be buried

under all kinds of disguises.
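"Mutual information between a mind and its environment" can be made concrete with a toy example. The Python sketch below assumes a hypothetical world that is rainy or dry with equal odds and a sensor (standing in for a belief-forming process) that reports correctly with some fixed accuracy; the setup and names are illustrative assumptions, not from the source. A process that tracks the world shares positive mutual information with it, measured in bits; one whose output is independent of the world shares none.

```python
import math
from typing import Dict, Tuple

def mutual_information(joint: Dict[Tuple[str, str], float]) -> float:
    """Mutual information I(W; S) in bits for a joint distribution P(w, s)."""
    p_w: Dict[str, float] = {}
    p_s: Dict[str, float] = {}
    for (w, s), p in joint.items():
        p_w[w] = p_w.get(w, 0.0) + p
        p_s[s] = p_s.get(s, 0.0) + p
    return sum(
        p * math.log2(p / (p_w[w] * p_s[s]))
        for (w, s), p in joint.items()
        if p > 0  # zero-probability cells contribute nothing
    )

def sensor_joint(accuracy: float) -> Dict[Tuple[str, str], float]:
    """Hypothetical setup: rain or dry with equal odds; the reading is correct with prob `accuracy`."""
    joint: Dict[Tuple[str, str], float] = {}
    for world in ("rain", "dry"):
        for reading in ("rain", "dry"):
            p_reading = accuracy if reading == world else 1 - accuracy
            joint[(world, reading)] = 0.5 * p_reading
    return joint

print(round(mutual_information(sensor_joint(0.9)), 3))  # 0.531
print(mutual_information(sensor_joint(0.5)))            # 0.0
```

A perfectly reliable sensor (accuracy 1.0) yields exactly 1 bit, the full uncertainty about a fair binary world; a coin-flip sensor yields 0 bits, no matter how confident its owner feels.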


16 | Reductionism 101

This chapter deals with the project of scientifically explaining phenomena.

A philosophical argument that free will does or

does not exist is different from the question, “what cognitive algorithm, as

felt from the inside, drives the intuitions about the debate?” Dissolve the

question by explaining how the

confusion arises. Good philosophy should not respond to a question like “if a

tree falls in a forest but no one hears it, does it make a sound?” by picking

and defending a position (“Yes!” or “No!”), but by deconstructing the human

algorithm to the point where there is no feeling of a question left. What goes

on inside the head of a human who thinks they have free will?

Confusion exists in the map, not in the

territory. Questions that seem unanswerable, where you cannot imagine what an

answer would look like, mark places where your mind runs skew to reality. Where

the mind cuts against reality’s grain, it generates questions like “do we have

free will?” or “why does anything exist at all?” Bad things happen when people

try to answer them: they inevitably generate a Mysterious Answer. These wrong questions must be dissolved by

understanding the cognitive algorithms causing the feeling of a question.

When facing a wrong question, don’t ask “why is

X the case?” but instead ask “why do I

think X is the case?” and trace back the causal history of your belief. You

should be able to explain the steps in terms of smaller, non-confusing

elements. For example, rather than asking “why do I have free will?” try asking

“why do I think I have free will?” This latter question is guaranteed to have a

real answer whether or not there is any such thing as free will, because you

can explain it in terms of psychological events.

Science fiction artists seem to think that

sexiness is an inherent property of a woman, such that an alien invader would

find her attractive despite having a different mind and different evolutionary

history. Old pulp magazine covers depicted “bug-eyed monsters” carrying off girls

in torn dresses.

This is a case of the Mind Projection Fallacy, coined by E.T.

Jaynes. It is a general error to project your own mind’s properties into the

external world. Other examples include Kant’s declaration that space by its

very nature is flat, and Hume’s definition of a priori ideas as those “discoverable by the mere operation of

thought, without dependence on what is anywhere existent in the universe”.

Probabilities express states of partial

information; they are not inherent

properties of things. It is only agents who can be uncertain. Ignorance is in

the mind, and a blank map does not correspond to a blank territory. And via

Bayes’s Theorem, learning different items of evidence can lead you to different

states of partial knowledge (unsurprisingly). There is no “real probability”

that a flipped coin will come up heads; the outcome-weighting you assign to it

depends on the information that you have about the coin. The coin itself has no

mind and doesn’t assign a probability to anything.
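To see how the same coin can warrant different probabilities, here is a minimal Python sketch with a hypothetical bag in which half the coins are fair and half are double-headed (the setup and names are illustrative assumptions, not from the source). Three observers assign different probabilities to the same coin's next flip, and each is correct given what they know; the difference lies entirely in their information, updated with Bayes's Theorem.

```python
from fractions import Fraction

# Hypothetical setup: the coin was drawn from a bag that is half fair coins,
# half double-headed coins. The coin is the same for every observer.
PRIOR_DOUBLE = Fraction(1, 2)
P_HEADS = {"fair": Fraction(1, 2), "double": Fraction(1)}

def p_next_heads(p_double: Fraction) -> Fraction:
    """Probability the next flip lands heads, given P(coin is double-headed)."""
    return p_double * P_HEADS["double"] + (1 - p_double) * P_HEADS["fair"]

def update_on_heads(p_double: Fraction) -> Fraction:
    """Bayes's Theorem: P(double | heads) = P(heads | double) * P(double) / P(heads)."""
    return P_HEADS["double"] * p_double / p_next_heads(p_double)

alice = p_next_heads(PRIOR_DOUBLE)                 # has seen nothing
bob = p_next_heads(update_on_heads(PRIOR_DOUBLE))  # saw one earlier flip land heads
carol = p_next_heads(Fraction(0))                  # inspected the coin: it is fair

print(alice, bob, carol)  # 3/4 5/6 1/2
```

Exact fractions are used only so the three answers print cleanly; none of the three numbers is "the" probability of the coin.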

The morning star and evening star are both

Venus, but the quotation “the morning star” is not substitutable for “the evening star”. You have to distinguish

beliefs/concepts from things, and remember that truth compares beliefs to

reality, but reality is real regardless. (Reality itself does not need to be

compared to any beliefs in order to be real.) If you don’t make a clear enough

distinction between your beliefs about the world, and the world itself, it is

very easy to derive wrong conclusions. As Alfred Tarski said: “snow is white”

is true if and only if snow is white.

Confusing belief with reality is easier when

using qualitative reasoning, which leads to mistakes like thinking “different

societies have different truths” (no, they have different beliefs). Instead of a qualitative binary belief or disbelief, you

should use quantitative probability distributions and degrees of accuracy

(measured in log base 2 bits). For example, if you assign a 70% probability to

the sentence “snow is white” being true, and if snow is white, then your

probability assignment is more accurate than it would have been if you had

assigned a 60% chance: it scores log2(0.7) ≈ -0.51 bits, against log2(0.6) ≈ -0.74

bits for the 60% assignment. For meta-beliefs (i.e. beliefs about what you believe), you may assign

credence close to 1, since you may be less uncertain about your uncertainty

than you are about the territory. This way you can avoid mixing up beliefs,

accuracy, and reality – which are all different things.
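The scoring rule used above (log base 2 of the probability you assigned to what actually happened) fits in a few lines. This is a minimal Python sketch; the function name is illustrative.

```python
import math

def log_score(p_assigned: float, was_true: bool) -> float:
    """Accuracy score in bits for assigning probability p_assigned to a proposition.

    If the proposition is true you score log2(p); if false, log2(1 - p).
    Scores are never positive; closer to 0 means more accurate.
    """
    p = p_assigned if was_true else 1 - p_assigned
    return math.log2(p)

# "Snow is white" is true, so compare the two assignments from the text.
print(round(log_score(0.7, True), 2))   # -0.51
print(round(log_score(0.6, True), 2))   # -0.74
# Had snow not been white, the 70% assignment would have scored worse.
print(round(log_score(0.7, False), 2))  # -1.74
```

Note that the score belongs to the belief, compared against reality; reality itself has no score.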

Reality itself is not “weird” or “surprising”.

If your intuitions are shocked by the facts, then those are poor intuitions

(i.e. models) and they should be updated or discarded. People think that

quantum physics is weird, yet they have the bizarre idea that reality ought to

consist of little billiard balls bopping around, when in fact reality is a

perfectly normal cloud of complex amplitude in configuration space. If you find

this “weird”, that’s your problem,

not reality’s problem, and you’re the

weird one who needs to change. Reality has been around since long before you

showed up. There are no surprising facts, only models that are surprised by

facts.

Yudkowsky gives the example of how he used to

browse random websites when he couldn’t work, and he thought that he couldn’t

predict when he would become able to work again. But if you see your

hour-by-hour work cycle as chaotic or unpredictable, your productivity may not

actually be unpredictable; you may just be committing the Mind Projection

Fallacy, i.e. the problem is your own stupidity with respect to predicting

it. Inverted stupidity looks like chaos: we often fail to notice our own

ignorance, and see only a chaotic feature of the environment. Hence we miss

opportunities to improve.

Reductionism is disbelief in a particular form of the mind projection fallacy:

the belief that the higher levels of simplified multilevel models exist in the territory.

Only the fundamental laws of physics

are “real”, but for convenience we use different representations at different

levels or scales of reality. For example, we use different computer models for

the aerodynamics of a 747 and collisions in the Relativistic Heavy Ion Collider

(RHIC), but they obey the same fundamental Special Relativity, quantum

mechanics and chromodynamics. We build models

of the universe that have many different levels of description, but so far

as anyone has been able to determine, the universe

itself has only the single level of fundamental physics (reality doesn’t

explicitly compute protons, only quarks). The scale of a map is not a fact

about the territory; it’s a fact about the map.

Poets like John Keats have lamented that “the

mere touch of cold philosophy” has destroyed haunts in the air, gnomes in the

mine, and rainbows. But one of these things is not like the others. There is a

difference between explaining

something (e.g. rainbows) and explaining

it away (e.g. gnomes and haunts). The former, the rainbow, is still there.

The latter has been shown to be an error in the map, since there never were

gnomes in the mine! When you don’t distinguish between the multi-level map and

the mono-level territory, then when someone tries to explain to you that the

rainbow is not a fundamental thing in physics, acceptance of this will feel

like erasing rainbows from your multi-level map, which feels like erasing

rainbows from the world. But when physicists say “there are no fundamental rainbows”, it does not mean

“there are no rainbows”.

It is fake

reductionism to profess that something has been explained by Science,

without seeing how it is reducible. Imagine a dour-faced philosopher, who isn’t

able to see where the rainbow comes

from, telling you that “there’s nothing special about the rainbow, scientists

have explained it away, just something to do with raindrops or whatever,

nothing to be excited about.” The anti-reductionists experience “reduction” in

terms of being told that the password is “Science”, with the effect of moving

rainbows to a different literary genre (one

they’ve been taught to regard as boring). Genuine knowledge and understanding

lets you do things like play around with prisms and make your own rainbows with

water sprays. Scientific reductionism does not have to lead to existential

emptiness.

Poets write about Jupiter the god, but not

Jupiter the spinning sphere of ammonia and methane. Equations of physics aren’t

about strong raw emotions. Classic great stories (like those told about Jupiter

the god) touch our emotions, but why should Jupiter be human when we are humans? It’s not necessary for

Jupiter to think and feel in order for us to tell stories, because we can

always write stories with humans as their protagonists. We don’t have to keep

telling stories about Jupiter. That being said, we could do with more diverse

poetry and original stories. The Great Stories are old!


17 | Joy in the Merely Real

This chapter touches on the emotional, personal significance of the scientific world-view.

Keats wrote in his poem Lamia that rainbows go in “the dull catalogue of common things”. Anything

real is, in principle, scientifically explicable. Nothing that actually exists

is inherently mysterious. But being unmagical and knowable doesn’t make

something not worth caring about! We have to take joy in the “merely” real and

mundane, or else our lives will always be empty. Don’t worry if quantum physics

turns out to be normal; if you can’t take joy in things that turn out to be

explicable, you’re going to set yourself up for eternal disappointment – a life

of irresolvable existential ennui.

Solving a mystery can feel euphoric –

especially if you discover the answer to a problem that nobody else has

answered. But if you personally don’t know, why should it matter if someone

else has the answer? If you don’t understand a puzzle, there’s a mystery, and

you should still take joy in discovering the solution. We shouldn’t base our

joy on the fact that nobody else has done it before. Stop worrying about what

other people know, and think of how many things you don’t know! Rationalists shouldn’t have less fun.

Why talk about joy in the merely real when

discussing reductionism? One reason is to leave a line of retreat; another is

to improve your own abilities as a rationalist by learning to accomplish things

in the real world rather than in a fantasy. Getting close to the truth requires

binding yourself to reality. Don’t invest your emotional energy in magic (or

lotteries), but redirect it into the universe. This should not create

existential anguish, because that which the truth nourishes should thrive. Emotions

that cannot be destroyed by the truth are not irrational. Understanding the

rainbow does not subtract its beauty; it adds

the beauty of physics.

If people don’t take a scientific attitude toward

this universe, why expect them to become powerful sorcerers in a fantasy world where

magic were “merely real”? Magic, like UFOs, gets much of its charm from the

fact that it doesn’t actually exist. If dragons were real, they wouldn’t be

exciting, because people don’t take joy in the merely real. If we ever create

dragons or find aliens, people would treat them like zebras – most people

wouldn’t bother to pay attention, while some scientists would get oddly excited

about them. If you’re going to achieve greatness anywhere, you may as well do

it in reality.

Rationalists should bind themselves emotionally

to a lawful reductionistic universe, and direct their hopes and care into

merely real possibilities. So why not make a fun list of abilities that would

be amazingly cool if they were magic, or if only a few chosen people had them?

Imagine if, instead of the ordinary one eye, you possessed a magical second eye

which enabled you to see into the third dimension and use legendary

distance-weapons – an ability we’d call Mystic Eyes of Depth Perception. Speech

could be “vibratory telepathy”. Writing could be “psychometric tracery”. Etc.

We shouldn’t think less of these abilities just because they are common.

Science doesn’t have to be recent or

controversial to be interesting, beautiful, or worth learning. Indeed,

cutting-edge news is often wrong or misleading, because it’s based on the

thinnest of evidence (and often conveys fake explanations). By the time anything

is solid science, it is no longer a “newsworthy” headline. Scientific

controversies are topics of such incredible difficulty that even people in the

field aren’t sure what’s true. So it is better to read well-written elementary

textbooks rather than press releases. Textbooks will offer you careful

explanations, examples, test problems, and likely true information. Study the

settled science before trying to understand the outer fringes.

Breaking news in science is often controversial

and hard to understand (and has a high chance of not replicating), so why

don’t newspapers report more often on understandable explanations of old science?

Sites like Reddit and Digg already do this sometimes. Perhaps journalists

should make April 1st a new holiday called “Amazing Breakthrough

Day”, in which journalists report on great scientific discoveries of the past

as if they had just happened and were still shocking (under the protective

cover of April Fool’s Day). For example, “BOATS EXPLAINED: Centuries-Old

Problem Solved By Bathtub Nudist” (Archimedes).

Trying to replace religion with atheism,

humanism or transhumanism doesn’t work, because it would just be trying to

imitate something that we don’t really need to imitate. Atheistic hymns that

try to imitate religion usually suck. But in a world in which religion never

existed, people would still seek the feeling of transcendence; and this isn’t

something we should always avoid. A sense of awe is not exclusive to religion. Unlike

theism, space travel is a lawful dream; and humanism is not a substitute for

religion because it directs one’s emotional energies into the real universe.

It’s not just a choice of drugs: humanity actually

exists.

We value the same objects more if we believe

they are in short supply. Scarcity makes the unobtainable more desirable, and

“forbidden” information seems more important or trustworthy. This is shown by

experimental evidence (see Robert Cialdini’s ‘Influence: The Psychology of Persuasion’). Psychologically, we seek

to preserve our options (and leaping on disappearing options may have been

adaptive in a hunter-gatherer society). However, desiring the unattainable is

likely to cause frustration. And as soon as you actually get it, it stops being

unattainable. Tim Ferriss recommends that, instead of asking yourself which

possessions or status-changes would make you happy, you ask which ongoing experiences would make you

happy.

Watching the birth of a child or a space

shuttle launch can inspire feelings of “sacredness”, but religion corrupts

this; religion makes the experience mysterious, faith-based, private, and

separated from the merely real – but it doesn’t have to be! Religion twists the

experience of sacredness to shield itself from criticism. Some folks try to

salvage “spirituality” from religion. But the many bad habits of thought that

have developed to defend religious and spiritual experience aren’t worth

saving. Let’s just admit we were entirely wrong, and enjoy the universe that’s

actually here.

Too few people study science, because they

think it’s freely accessible, so it doesn’t fit their need for deep secret

esoteric hidden truth. (In fact, you have to study a lot before you actually

understand science, so it’s not public knowledge.) Because it is perceived that

way, people ignore science in favor of cults that conceal their secrets, even

if those secrets are wrong. So if we want to spread science, perhaps we should

hide it in vaults guarded by mystic gurus wearing robes, and require fearsome

initiation rituals.

This parody sketch about “the Bayesian

Conspiracy” depicts how Brennan, a character in the Beisutsukai series, is inducted into the Conspiracy. After climbing

sixteen times sixteen steps, Brennan passes through a glass gate into a room

lined with figures robed and hooded in light-absorbing cloth. They chant the

names of Jakob Bernoulli, Abraham de Moivre, Pierre-Simon Laplace, and Edwin

Thompson Jaynes, who are dead but not forgotten. Brennan is asked to perform a

Bayesian calculation, and is given a ring when he finally gives the correct

answer.


18 | Physicalism 201

This chapter is on the hard problem of consciousness.

As we’ve seen, the reductionist thesis is that

we use multi-level models for computational reasons, but physical reality has

only a single level. You can see how your hand (the higher level) reduces to

your fingers and palm (the lower level). These are the same, but from different

points of view. While it is conceptually

possible to separate them, that doesn’t make it logically or physically

possible. It is silly to think that your fingers could be in one place and your

hands somewhere else. And just because something can be reduced to smaller

parts doesn’t mean the original thing doesn’t exist.

How can you reduce anger to atoms, when atoms

themselves are emotionless? Instead of professing passwords, try to understand

how causal chains in your mind compute the consequences of options, and how

this echoes the environment. But this is challenging, and the ideas of neurons,

information processing, computing etc. give us the benefit of hindsight.

Without them it is hard to understand how little bouncing billiard balls could

combine in such a way as to make something angry. But yes, even something like

anger can be reduced to atoms.

For a very long time, people had a detailed

understanding of kinetics (with concepts like momentum and elastic rebounds)

and an understanding of heat (with concepts like temperature and pressure). In

hindsight it is obvious that heat is motion, but in 1824 it was conceivable

that the two were separate. It took an extraordinary amount of work to

understand things deeply enough to make us realize that heat and motion were

really the same thing. To cross the gap, you’d have to conceive it possible for

heat and motion to be the same, and then see how the former reduces to the latter, which is hard.
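What "heat is motion" means quantitatively can be sketched for the simplest case. The Python below assumes an ideal monatomic gas (argon is an illustrative choice), for which kinetic theory gives (3/2) k_B T = mean kinetic energy per atom. The molecular velocities are simulated from the Maxwell-Boltzmann distribution rather than measured; the point is that the higher-level quantity, temperature, is recovered from the lower-level motion alone.

```python
import random
from typing import List, Tuple

K_B = 1.380649e-23   # Boltzmann constant, J/K (exact SI value)
M_ARGON = 6.63e-26   # approximate mass of one argon atom, kg

Velocity = Tuple[float, float, float]

def sample_velocities(temperature: float, mass: float, n: int, rng: random.Random) -> List[Velocity]:
    """Draw n 3D velocities from the Maxwell-Boltzmann distribution.

    Each Cartesian component is Gaussian with mean 0 and variance k_B * T / m.
    """
    sigma = (K_B * temperature / mass) ** 0.5
    return [(rng.gauss(0, sigma), rng.gauss(0, sigma), rng.gauss(0, sigma)) for _ in range(n)]

def temperature_from_motion(velocities: List[Velocity], mass: float) -> float:
    """Recover temperature from motion: (3/2) k_B T equals the mean kinetic energy."""
    total_ke = sum(0.5 * mass * (vx**2 + vy**2 + vz**2) for vx, vy, vz in velocities)
    mean_ke = total_ke / len(velocities)
    return 2 * mean_ke / (3 * K_B)

rng = random.Random(0)  # fixed seed so the run is reproducible
velocities = sample_velocities(300.0, M_ARGON, 100_000, rng)
print(temperature_from_motion(velocities, M_ARGON))  # prints a value close to 300
```

The thermometer reading and the molecular motion are not two facts that merely correlate; one is a summary statistic of the other.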

As an example of “Amazing Breakthrough Day”,

one could write an article talking about how the brain has recently been

discovered by a multinational team of scientists to be made from a complicated

network of cells called neurons, which use electrochemical activities to

perform thought. This discovery indicates that mind and body are of one

substance, contrary to Descartes. The brain, as the seat of reason, could

according to Darwin be the product of a history of non-intelligent processes,

and therefore mental entities are probably not ontologically fundamental.

In the hunter-gatherer era, animism (i.e. the

belief that things like rocks and streams had spirits or minds) wasn’t

obviously stupid; if the idea were obviously stupid, no one would have believed

it. But now we know, thanks to microscopes, neuroscience, cognition as

computation, and Darwinian natural selection, that trees and rivers don’t think

or have intentions. Anthropomorphism only became obviously wrong when we

realized that the tangled neurons inside the brain were performing complex

information processing, and that this complexity arose as a result of

evolution.

Your brain is an engine that works by processing entangled evidence; this includes

thoughts themselves, because thoughts are existent in the universe (in the form

of neural patterns, i.e. the operation of brains). The facts that philosophers

call “a priori” arrived in your brain by a physical process. You might even

observe them within some outside brain. There is no “a priori truth factory”

that works without a reason. Simple algorithms are more

efficient, and Occam’s Razor works, because our simple low-entropy universe

has short explanations to be found.

The map is multi-level but reality is

single-level, and truth involves a

comparison between belief and reality. The higher levels of your map have

referents in the single level of physics. Concepts note only that a cluster

exists, and do not define it exactly. We can’t practically specify everything

in terms of quarks, so our beliefs are “promissory notes” that refer to (and implicitly exist in) empirical clusters

in what we think is the single, fundamental level of physics. Implicit

existence is not the same as nonexistence. Virtually every belief you have is

not about elementary particle fields, but this doesn’t mean that those beliefs

aren’t true. For example, “snow is white” does not mention quarks anywhere, and

yet snow nevertheless is white – it may be a computational shortcut, but it’s

still true. This view of how we can form accurate beliefs way above the

underlying reality is not circular, but self-consistent upon reflection.

Your philosophical zombie is putatively a being that is exactly identical to you,

except that it’s not conscious. Some argue that if zombies are “possible” then

we can deduce a priori that

consciousness is extra-physical. Epiphenomenal property dualists (who think zombies are possible) like David

Chalmers believe in consciousness, but also that it has no real-world effects. (They

are not the same as substance dualists like

Descartes who believe that mind-substance is causally active.) But you can be

aware of your awareness and write about it, which requires physical causality.

Whatever makes you say “I think therefore I am” causes your lips to move, and is thus within the chains of cause-and-effect

that produce our observed universe. Philosophers writing papers about

consciousness would seem to be at least one effect of consciousness upon the

world. Zombie-ists would need an entirely separate reason within physics for why people would talk about subjective

sensations. So epiphenomenalism is pointlessly complicated! It postulates a

mysterious, separate, extra-physical, inherently mental property of

consciousness, and then further postulates that it doesn’t do anything. That is

deranged. Don’t try to put your consciousness or your personal identity outside

physics.

A few more points on p-zombies: Your internal

narrative can cause your lips to say things, and consciousness is probably that which causes you to be aware of your

awareness. The reductionist position is that that-which-we-name “consciousness”

happens within physics, even if we

don’t fully understand it yet. It should be logically impossible to eliminate

consciousness without moving any atoms. It seems deranged to say that something

other than consciousness causes my internal narrative to say “I think therefore

I am” but that consciousness does exist epiphenomenally.

The argument against zombies can be extended

into a more general principle, albeit with some difficulty. The Generalized Anti-Zombie Principle (GAZP)

says that something that can’t change your internal narrative (which is caused

by the true referent of “consciousness”) can’t stop you being conscious. Any

force smaller than thermal noise won’t “switch off” your consciousness, because

it doesn’t significantly affect the true cause of your talking about being

conscious. This implies that you could, in principle, transfer your

consciousness to artificial silicon neurons.

A Giant

Lookup Table (GLUT) could in principle replace a human brain and thus seem

conscious, but a randomly generated GLUT is extremely unlikely to have the same

input-output relations as the human brain. The GAZP says that the source of a

GLUT that mirrors the brain is probably a conscious designer. When you follow

back discourse about “consciousness”, you generally find consciousness, because

it is responsible for the improbability of

the conversation you’re having. This kind of GLUT would have to be precomputed by

a human using a computational specification of a human brain.

That it is impossible to observe something is

not enough to conclude that it doesn’t exist. If a spaceship goes over the

cosmological horizon relative to us, so that it can no longer communicate with

us, should we believe that the spaceship instantly ceases to exist? We cannot

interact with a photon outside our light cone, but it continues to exist as a

logical implication of the general laws of physics, which themselves are

testable. Believing this doesn’t violate Occam’s Razor, because Solomonoff

induction applies to laws or models, not individual quarks. The photon is an

implied invisible, not an additional invisible.

Under the Minimum Message Length formalism

of Occam’s Razor (which is nearly equivalent to Solomonoff Induction), if you

have to tell someone how your model of the universe works, you just have to

write down some equations to simulate your model – not specify individually the

location of each quark in each star in each galaxy – and the amount of time it

takes to write down the equation doesn’t depend on the amount of “stuff” that

obeys it.
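As a rough illustration of why a model's description need not grow with the amount of stuff it describes, here is a toy Python sketch. It uses the length of a string as a crude stand-in for message length (only a loose proxy for the Minimum Message Length formalism), and the "law" (squares of integers) and function names are hypothetical.

```python
# Toy illustration: description length of a law vs. an explicit list of outcomes.
# String length is a crude, hypothetical proxy for message length.

LAW = "lambda n: [i * i for i in range(n)]"   # hypothetical compact "law"

def explicit_listing(n):
    """Spell out every outcome individually, like listing each quark's position."""
    return repr([i * i for i in range(n)])

def law_description(n):
    """The law plus the number of items it should generate."""
    return LAW + f"; n={n}"

for n in (10, 1_000, 100_000):
    # The law's description only grows by the digits of n; the listing grows with n.
    print(n, len(law_description(n)), len(explicit_listing(n)))
```

The law reproduces the listing exactly (`eval(LAW)(n)` returns the same list), so the extra items it implies cost almost nothing to describe.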

This is a satirical script for a short scene in

a zombie movie, not about the folkloric lurching and drooling kind of zombie but the philosophical kind. Imagine a world where normal people are

infected with an “epiphenomenal virus”, which cannot be experimentally

detected. Things would go on just as before. Or would they? The victims sure look conscious… but don’t let the fact

that they look like humans, talk like humans, claim to have qualia, and are

identical to humans on the atomic level, fool you. These clever scumbags are

zombies!

Is science only about “natural” things? People

say this when invoking the supernatural,

which appeals to ontologically basic mental entities like souls. But firstly,

this is a case of non-reductionism, which is a confusion because it is

incoherent. It is not clear what an irreducibly mental and fundamentally

complicated universe is even supposed to look like. Secondly, if you test

supernatural explanations the way you would test any other hypothesis (by

converting them into a reducible and natural formulation), you will probably still

find out that they aren’t true.

Supernatural models do make one distinctive prediction:

that information can be transferred “telepathically” between brains in the

absence of any known material connection between them. If psychic powers were

discovered they would be strong Bayesian evidence that non-reductionism is

correct and that beliefs are ontologically fundamental entities. But more

likely, this will not be discovered, and the reason we are tempted by

non-reductionist worldviews is that we just lack self-knowledge of our own brains’

quirky internal architecture. If naturalism is correct, then the attempt to

count “belief” or the “relation between belief and reality” as a single basic

entity is simply misguided anthropomorphism.


19

|

Quantum Physics and Many Worlds

|

This

chapter is on the measurement problem in physics. Yudkowsky discusses

many-worlds interpretations as a response to the Copenhagen interpretations.

Since he is not a physicist, you are free to consult outside sources to vet

his arguments or learn more about the physics examples. Note that the

Many-Worlds Interpretation is still controversial.

|

Quantum mechanics doesn’t deserve its fearsome

reputation. Quantum mechanics is considered by many to be weird, confusing or

difficult; yet it is perfectly normal reality. There are no surprising facts,

only models that are surprised by facts: the issue lies with your intuitions, not with quantum

mechanics. A major source of confusion is that people are told that quantum

physics is supposed to be mysterious, and they are presented with historically erroneous concepts like “particles” or “waves” rather than a realist

perspective on quantum equations from the start.

What

is the stuff reality is made of? The universe isn’t made of little billiard

balls, nor waves in a pool of aether, but mathematical entities called configurations that describe the

position of particles, and amplitude

flows between these configurations. A configuration can store a single value in the form of a complex number (a + bi), where i is defined as √(-1). These complex numbers are what we call amplitudes, and they are out there in the territory. We cannot measure amplitudes directly, only the ratios of the squared moduli (absolute squares) of the amplitudes of different configurations. To find the amplitude of a

configuration, you sum up all the amplitude flows into that configuration.

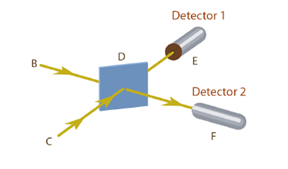

The figures above depict the

classic split-photon experiment with half-silvered mirrors. On the left, a

photon is sent from the source toward the half-silvered mirror A; this is a

configuration. We can give the configuration “a photon heading toward A” a

value of (-1 + 0i). From there, the

photon can take alternative pathways – B and C are full mirrors and D is

another half-mirror. The half-mirrors multiply by 1 when the photon goes

straight and multiply by i when the

photon turns at a right angle. The full-mirrors always multiply by i. Note that all these amplitude flows happen,

and that they can cancel each other out, which is why no photon is detected at

E. In the diagram on the right, the configuration of “a photon going from B to

D” has an amplitude value of zero (because it’s blocked), so Detector 1 now goes

off half of the times that a detector fires.
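The amplitude bookkeeping in the diagrams above can be sketched with Python complex numbers. The geometry is an assumption based on Yudkowsky's original figure: the photon is deflected at A toward B and passes straight through A toward C; at D, the path from B turns toward E (Detector 1) while the path from C goes straight to E; the other exit from D, labeled F here, is assumed to be Detector 2. The function names are illustrative.

```python
# Amplitude bookkeeping for the half-silvered-mirror experiment.
# Assumed geometry: deflected at A -> B, straight through A -> C;
# at D, B's path turns toward E (Detector 1), C's path goes straight to E;
# the other exit from D is F (Detector 2).

STRAIGHT = 1    # half-mirror, photon continues straight: multiply by 1
TURN = 1j       # any mirror, photon turns 90 degrees: multiply by i

def detector_amplitudes(block_b_to_d=False):
    """Return the amplitudes of 'photon from D to E' and 'photon from D to F'."""
    start = -1 + 0j                        # "a photon heading toward A"
    a_to_b = start * TURN                  # half-mirror A, deflected
    a_to_c = start * STRAIGHT              # half-mirror A, straight
    b_to_d = 0j if block_b_to_d else a_to_b * TURN   # full mirror B (or blocked)
    c_to_d = a_to_c * TURN                 # full mirror C
    # Flows that arrive at the same configuration are added together.
    d_to_e = b_to_d * TURN + c_to_d * STRAIGHT
    d_to_f = b_to_d * STRAIGHT + c_to_d * TURN
    return d_to_e, d_to_f

def squared_moduli(amps):
    return [abs(a) ** 2 for a in amps]

print(squared_moduli(detector_amplitudes()))                   # [0.0, 4.0]
print(squared_moduli(detector_amplitudes(block_b_to_d=True)))  # [1.0, 1.0]
```

The amplitudes are left unnormalized, so the outputs are relative weights: only their ratios matter, which is consistent with the point that we can observe only ratios of squared moduli.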

While the previous experiment deals with one

moving particle, real configurations are about multiple particles (in fact, all

the particles in the universe). Configurations specify where particles are (e.g. “a photon here, a photon there…”), but

they don’t keep track of individual particles!

In the figure above, two

photons head toward D at the same time. But whether both photons are deflected

or both go straight through, the resulting configuration is the same. Amplitude

flows that put the same types of particle in the same places flow into the same

configuration, even if the particles came from different places. Unlike

probabilities, amplitudes can have opposite signs (positive or negative) and

thus cancel each other out (giving a squared modulus of zero for the sum),

which is how we can detect which configurations are distinct. So it is an

experimentally testable fact that “photon B here, photon C there” is the same

configuration as “photon C here, photon B there”. This is why we may see Detector

1 go off twice or Detector 2 go off

twice, but not both Detectors go off at the same time.
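The cancellation in the two-photon experiment comes down to a single addition. This minimal sketch uses the same multiply-by-1 / multiply-by-i rule for the half-mirror D; the variable names are illustrative, and the results are relative weights rather than normalized probabilities.

```python
# Two photons arrive at half-mirror D at the same time, one from B and one from C.
STRAIGHT = 1
TURN = 1j

# "One photon at each detector" can happen two ways: both go straight, or both turn.
# Configurations don't track individual photons, so both flows land in the SAME
# configuration and their amplitudes add: 1 + (i * i) = 1 - 1 = 0.
one_at_each = STRAIGHT * STRAIGHT + TURN * TURN

# "Both at the same detector" needs one photon to turn and the other to go straight,
# and for a given detector there is only one way to arrange that.
both_at_detector_1 = TURN * STRAIGHT
both_at_detector_2 = STRAIGHT * TURN

# If the two routes led to distinct configurations, we would add squared moduli
# instead, and nothing would cancel.
one_at_each_if_distinct = abs(STRAIGHT * STRAIGHT) ** 2 + abs(TURN * TURN) ** 2

print(abs(one_at_each) ** 2, abs(both_at_detector_1) ** 2, abs(both_at_detector_2) ** 2)
print(one_at_each_if_distinct)
```

The zero weight for "one at each detector" is the experimentally testable signature that "photon B here, photon C there" and "photon C here, photon B there" are one configuration.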

A configuration is defined by all particles. What

makes a configuration distinct is at least one particle in a different state –

including particles constituting the experimental equipment! Adding a sensor

that tries to “measure” things introduces a new element into the system and

thus makes it a distinct configuration. If amplitude flows alter a particle’s

state, then they cannot flow into the same configuration as amplitude flows

which do not alter it.

In the above experiment, sensitive thingy S is in a different

state between “a photon from D to E and S in state no” and “a photon from D to E and S in state yes”. By measuring the

amplitude flows, we have stopped them from flowing to the same configurations. In

this case the amplitudes don’t cancel out, leading to a different experimental

result. We find that the photon has an equal chance of striking Detector 1 and

Detector 2. (Remember, in the first diagram in “Configurations and Amplitude”,

the photon always struck Detector 2.) This phenomenon confused the living

daylights out of early quantum experimenters. But the distinctness of

configurations is a physical fact, not a fact about our knowledge, and there’s

no need to suppose that the universe cares what we think.
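Here is a hedged Python sketch of how a sensor splits configurations. It assumes, as in Yudkowsky's figure, that S sits on the path from B to D and flips from "no" to "yes" when the photon passes; the mirror geometry (deflected at A toward B, straight toward C, E as Detector 1, F as Detector 2) and all names are assumptions for illustration. Amplitudes are summed only within a configuration; across distinct configurations, squared moduli are added.

```python
STRAIGHT = 1    # half-mirror, straight through: multiply by 1
TURN = 1j       # any mirror, 90-degree turn: multiply by i

def detector_weights_with_sensor():
    """Relative weights of Detector 1 (E) and Detector 2 (F) when sensor S,
    assumed to sit on the B -> D path, records whether the photon passed it."""
    start = -1 + 0j
    b_to_d = start * TURN * TURN        # deflected at A, full mirror B; S now "yes"
    c_to_d = start * STRAIGHT * TURN    # straight through A, full mirror C; S still "no"

    # Each key is a distinct configuration: (photon's destination, state of S).
    configurations = {
        ("E", "yes"): b_to_d * TURN,
        ("E", "no"): c_to_d * STRAIGHT,
        ("F", "yes"): b_to_d * STRAIGHT,
        ("F", "no"): c_to_d * TURN,
    }
    # Amplitudes in different configurations never add; their squared moduli do.
    weight = {"E": 0.0, "F": 0.0}
    for (detector, _sensor_state), amplitude in configurations.items():
        weight[detector] += abs(amplitude) ** 2
    return weight

print(detector_weights_with_sensor())   # {'E': 2.0, 'F': 2.0}
```

Compare this with the first experiment in the text, where no photon reached E: the only change is one extra particle (S) whose state keeps the two flows into "photon from D to E" in separate configurations.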

Macroscopic

decoherence, also known as

“many-worlds”, is the view that the known quantum laws that govern microscopic

events simply govern at all levels without alteration. Collapse postulates assume that wavefunction superposition

“collapses” at some point before reaching the macroscopic level (some say due

to conscious awareness!), leaving only one configuration with non-zero

amplitude and discarding other amplitude flows. Many Worlds proposes that configurations where we observe and don’t

observe a measurement both exist with non-zero amplitude, but are decoherent

since they are too different from each other for their amplitude flows to flow

into common configurations. But early physicists used to assume that

measurements had single outcomes. They simply didn’t think of the possibility

of more than one world, even though it’s the straightforward result of applying

the quantum laws at all levels. So they invented an unnecessary part of quantum

theory which says that parts of the wavefunction spontaneously and mysteriously

disappear when decoherence prevents us from seeing them anymore. Yet collapse

theories are still supported by physicists today.

The idea that decoherence fails the test of

Occam’s Razor is wrong as probability theory. Occam’s Razor penalizes theories

for entities that must be specified explicitly rather than derived from compact laws; but the Many-Worlds

interpretation of quantum mechanics does not

violate Occam’s Razor because decoherent worlds follow from the compact laws of

quantum mechanics. Measurements obey the same quantum-mechanical rules as all

other physical processes. Some physicists just use probability theory in a way

that is outright mathematically wrong, on the level of 2+2=3. This is one

of the reasons why Yudkowsky, as a non-physicist, dares to talk about physics.

To probability theorists, words like “simple”, “testable”

and “falsifiable” have exact mathematical meanings: a hypothesis is falsifiable when it concentrates its probability mass into narrow outcomes, and testable when some possible evidence would, by Bayes’s Theorem, favor it over another hypothesis. Macroscopic decoherence is falsifiable for the same

reasons quantum mechanics is, and compared to the collapse postulate, it is

strictly simpler – because decoherence is a deductive consequence of the

wavefunction’s evolution. Within the internal logic of decoherence, the many

superposed worlds are simply a logical consequence of the general laws that

govern the wavefunction, and as such, do not cost us extra probability. Adding

collapse is a useless complication.

Suppose a murder case in a big city leaves no

evidence, yet one of the police detectives says, “Well, we have no idea who did

it, but let’s consider the hypothesis

that it was Mortimer Q. Snodgrass.” This can be called the fallacy of privileging the hypothesis. Before promoting a specific

hypothesis to your attention, you need to have some rational evidence already

at hand, because it takes more evidence to narrow down the space of all

possibilities than to figure out which

of the handful of candidate hypotheses is true. The anti-epistemology is to

talk endlessly about how you “can’t disprove” an idea, how the negative

evidence is “not conclusive”, how future evidence could confirm it but hasn’t happened yet, and so on. Single-world

quantum mechanics (i.e. collapse postulates) has no evidence in favor of it,

and there are a billion other possibilities that are no more complicated, so

it’s not worth even thinking about it. But due to historical accident, collapse

postulates are indeed spoken about.
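The claim that singling out a hypothesis takes more evidence than choosing among a handful can be put in bits: picking out one of N equally likely candidates takes roughly log2(N) bits of evidence. The city size of one million and the four-suspect shortlist below are hypothetical numbers chosen for illustration.

```python
import math

def bits_to_single_out(num_candidates):
    """Approximate bits of evidence needed to pick out one of
    num_candidates equally likely hypotheses."""
    return math.log2(num_candidates)

city_population = 1_000_000   # hypothetical size of the "big city"
shortlist = 4                 # hypothetical handful of real suspects

print(round(bits_to_single_out(city_population), 1))  # 19.9
print(bits_to_single_out(shortlist))                  # 2.0
```

Most of the work (about 18 of the roughly 20 bits) goes into narrowing a million people down to a shortlist; naming Snodgrass with no evidence skips exactly that part.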

Some people may be disturbed by the

straightforward prediction of quantum mechanics that they are constantly

splitting into zillions of other people. Egan’s

Law says: “It all adds up to normality”. The many worlds of quantum

mechanics have always been there, and they are where you have always lived –

not some strange, alien universe into which you have been thrust. But you

cannot causally affect other worlds, and decoherence has nothing to do with the

act of making decisions. Living in multiple worlds is the same as living in

one. Worrying about an extremely pleasant or awful world in your future is like

playing the lottery. So live in your own world. Quantum physics is not for building

strange philosophies around many-worlds, but for answering the question of what adds up to normality. If there were

something else there instead of

quantum mechanics, then the world

would look strange and unusual.

Before Hugh Everett III proposed his relative

state formulation (aka many-worlds) in 1957, none of the theories were very

good and the best quantum physicists could do was to “shut up and calculate”.

But that is not the same as claiming that “Shut up!” actually is a theory of physics, and that the

equations definitely don’t mean anything. Nevertheless, some jumped to the

conclusion that the wavefunction is only a probability.

This contributed to quantum non-realism, which is a semantic stopsign. If you

can’t say exactly what you mean by calling the quantum-mechanical equations

“not real”, then you’re just telling others to stop asking questions. The

equations do describe something – the

quantum world is really out there in the territory and the classical world

exists only implicitly within the quantum one (at least from the realist

perspective).

If wavefunction collapse actually happened, it

would be the only informally specified (qualitative), non-linear, non-unitary,

non-differentiable, discontinuous, non-local in the configuration space,

acausal (non-deterministic), and superluminal (faster than light) law in

quantum mechanics, and the only fundamental phenomenon to violate CPT symmetry,

Special Relativity and Liouville’s Theorem, and be inherently mental. It would

be the only fundamental law adopted without precise evidence to nail it down.

If early physicists like Niels Bohr had never made the mistake, and thought

immediately to apply the quantum laws at all levels to produce macroscopic

decoherence, then “collapse postulates” would today seem like a completely

crackpot theory. There would be many better hypotheses proposed to explain the

mysterious Born probabilities.

Early quantum physicists made the error of

forgetting that they themselves were made of atoms, so they concluded that conscious observation had a fundamental

effect. They didn’t notice that a quantum theory of distinct configurations

already explained the experimental result, without any need to invoke

consciousness. In retrospect, could philosophers have told the physicists that

this was a big mistake? Philosophical insight would not have helped them,

because it’s usually science that

settles a confusion. That’s why we don’t usually see philosophers sponsoring

major advances in physics. At the frontier of science, it takes intimate

involvement with the scientific domain in order to do the effective

philosophical thinking.

Some people think that free will and

determinism are incompatible. If the laws of physics control everything we do,

then how can our choices be meaningful? But “you” and physics are not competing

causal nodes; you are within physics!

Your desires, plans, decisions and actions cannot determine the future unless we live in a lawful, orderly

universe.

Things should not seem like

the causal network on the left, but the one on the right. If the future were

not determined by reality, it could not be determined by you. Yudkowsky calls

this view “Requiredism”: that planning, agency, choice etc. require some lawful determinism.

Anything you control is necessarily controlled by physics.

If collapse theories (or any theory of a

globally single world) were true, they would violate Special Relativity; and

although Special Relativity seems counterintuitive to us humans, what it really

says is that human intuitions about space and time are simply wrong. Given the

current state of evidence, the “many-worlds interpretation” (i.e. macroscopic

decoherence) wins outright. There is no reason to suppose that quantum laws are

different on the macroscopic level, so it seems obvious that there are other

decoherent Earths. You shouldn’t even ask,

“Might there only be one world?” The argument should have been over fifty years

ago. New physical evidence could reopen it, but we have no particular reason to

expect this. The main problem for Many Worlds is to explain the Born

probabilities.


20

|

Science and Rationality

|

This

chapter relates the ideas of previous ones to scientific practice. So if it

was many-worlds all along, and collapse theories are silly, did physicists in

the first half of the 20th century really screw up that badly? How

did they go wrong, and what lessons can we learn from this whole debacle?

|

This is another short story set in the same

universe as “The Ritual” and “Initiation Ceremony”. Future physics students

look back on the cautionary tale of quantum physics. Einstein, Schrödinger, and

von Neumann failed to see Many Worlds, perhaps because of administrative

burdens imposed by a system of science that thought it acceptable to take 30+

years to solve a problem, rather than resolving a major confusion faster. Eld Science was based on getting

to the truth eventually… but people

can think important thoughts in far less than thirty years if they expect speed

of themselves.

The failure of physics in the first half of the

20th century was not due to straying from the scientific method,

because the physicists who refuse to adopt many-worlds are obeying the rules of Science. Science and rationality (i.e.

Bayesianism) aren’t the same thing. The explanation of quantum mechanics was

meant to illustrate (among other things) the difference between the scientific method,

which demands new testable predictions, and Bayesian probability theory, which

suggests that macroscopic decoherence is simpler than collapse. Science says

that many-worlds doesn’t make new testable predictions because we can’t see all

the other worlds; Bayes says that the simplest quantum equations that cover all

known evidence don’t have a special exception for human-sized masses. This is a

clear example of a case where it is time to break your allegiance to Science.

The social process of Science doesn’t trust the

rationality of individual scientists, which is why we give them the motive to

make falsifiable experimental predictions. But the rational answer comes from Bayes’s Theorem and Solomonoff

Induction. Science doesn’t always agree with the exact, Bayesian, rational

answer, and Science wants you to go out and gather overwhelming experimental

evidence, because it doesn’t trust you

to be rational. It assumes that you’re too stupid and self-deceiving to just

use Solomonoff induction.

Science doesn’t care if you waste ten years on testing

a stupid theory, as long as you recant and admit your error. But some things (e.g.

cryonics) cannot be experimentally tested right now despite huge future

consequences; so you have to think rationally to figure out the answer. You

should not automatically dismiss such theories. You have to try to do the thing

that Science doesn’t trust you to do, and figure out the right answer before you get clubbed over the head

with it. Evolutionary psychology is another example of a case where rationality

has to take over from science.

Your private epistemic standard should not be

as lax as the ideal of Science, which asks only that you do the experiment and

accept the results. Science lets you believe any stupid idea that hasn’t been

refuted by experiment, and it lets people test whatever hypotheses they like,

as a social freedom. Science accepts slow, generational progress. But you need

Bayes (and Tversky & Kahneman) to tell you which hypotheses to test and precisely how much probability to

assign to them. Bayesianism says that there is always an exactly rational

degree of belief given your current evidence, and this does not shift a

nanometer depending on your whims.

It seems that scientists are not trained in

precise rational reasoning on sparse evidence (e.g. the formal definition of

Occam’s Razor, the conjunction fallacy, or the concepts of “mysterious answers”

and “fake explanations”). These are not standard, which is why modern world-class

scientists, like Sir Roger Penrose, still make mistakes like thinking that

consciousness is caused by quantum gravity. They were not warned to be stricter

with themselves. Maybe one day it will be part of standard scientific training,

but for now it’s not, and the absence is visible.

You can’t think and trust at the same time. To

grow as a rationalist, you must lose your emotional trust in the sanity of

normal folks. You must lose your trust that following any prescribed pattern

will keep you safe. Not even Science or Eliezer can save you from making

mistakes. There is no known procedure you can follow that makes your reasoning

defensible. Since the social rules of Science are verbal rather than

quantitative, it is possible to believe you are following them; but with

Bayesianism, it is never possible to do an exact calculation and get the exact

rational answer that you know exists. You are visibly less than perfect. So

learn to live with uncertainty while still having something to protect and

striving to do better.

Yudkowsky is less of a lonely iconoclast than

he seems, as many of these ideas are surprisingly conventional and are being

floated around by other thinkers (e.g. Max Tegmark and Colin Howson). Perhaps

Popper’s falsificationism should be replaced with Bayesianism. But this is not

as simple as redefining science. It would require not just teaching probability

theory, but also things like cognitive biases and social psychology. We need to

form a new coherent Art of Bayescraft

before we are actually going to do any better in the real world than modern

science.

Whether scientists accept an idea depends not

just on epistemic justification, but also on a social pack and extra evidence to

overcome cognitive noise, which is why Science is inefficient at reaching

conclusions. And Science doesn’t say which

ideas to test. Yet the bulk of work in progressing knowledge is in

elevating the right hypothesis to attention. Hence Bayesianism is faster than

science. Ironically, science would be stuck if there weren’t some people who could get it right in

the absence of overwhelming experimental proof, because in many answer spaces

it’s not possible to find the true hypothesis by accident.

Albert Einstein is an example of an individual

scientist who, in the presence of only a small amount of experimental evidence,

was unusually good at arriving at the truth faster than the social process of

Science (and even more unusually, he admitted it). Deciding which ideas to test

can entail high-minded thoughts like noticing a regularity in the data, or

inferring a new law from characteristics of known laws (as Einstein did with relativity).

Einstein used the data he already had more efficiently – in his armchair. It’s

possible to arrive at the right theory this way, but it’s a lot harder.

Einstein used evidence much more efficiently

than other physicists, but he was still extremely inefficient in an absolute sense. Imagine a world where

the average IQ is 140 and a huge team of physicists and cryptographers was

examining an interstellar transmission; going over it bit by bit for thirty

years, they could deduce principles on the order of Galilean gravity just from

seeing two or three frames of a picture. But a Bayesian superintelligence would make much more efficient use of sensory

data, such that it could invent Newtonian mechanics the instant it sees an

apple fall.

On the scale of intelligence, the distance

between Einstein and “village idiot” is tiny compared to the distance between

Einstein and a Bayesian superintelligence. In other words, it looks not like

this:

But more like this:

Yudkowsky was disappointed

when his childhood hero, Douglas Hofstadter, disagreed with this view. Perhaps this is a “cultural

gap” explained by Yudkowsky reading a lot of science fiction from a young age. This

was helpful for thinking beyond the human world. That is why he looked up to

the ideal of a Bayesian superintelligence, not Einstein. The role model you aim for should come from within your dreams, because by letting your

ideals be composed only of dead humans, you limit yourself to what has already

been accomplished, and you will ask too little of yourself.

People talk as if Einstein had magical

superpowers or an aura of destiny. There’s an unfortunate tendency to talk as

if Einstein, even before he was famous, had a rare inherent disposition to be

Einstein. But Einstein chose an important problem, had a new angle of attack,

and persisted for years. An intelligent person under the right circumstances can do better than Einstein. (Not

everyone, but many have potential.) The way you acquire superpowers is not by

being born with them, but by seeing with a sudden shock that they are perfectly

normal. What Einstein did isn’t magic; just look at how he actually did it!

In another story from the Beisutsukai series, Brennan and the other students are faced with

their midterm exams, and are given one month to develop a theory of quantum

gravity. Einstein was too slow to formulate General Relativity (taking ten years),

and his era lacked knowledge of cognitive biases and Bayesian methods. It

should be possible to do better, if you expect it. An open challenge in science

is quantum gravity, which is the question of how to unify General Relativity

and quantum mechanics.


Interlude: A Technical Explanation of Technical Explanation

YOU’VE SEEN THE Intuitive Explanation of Bayesian reasoning, but when do the

mathematical theorems apply and how do we use the theorems in real-world

problems? Is there a controversy? We begin by asking: What is the difference

between a technical

understanding and

a verbal understanding?

One visual metaphor for

“probability density” or “probability mass” is a lump of clay that you must

distribute over possible outcomes. This lets you visualize how probability is a

conserved resource – to assign higher probability to one hypothesis requires

stealing some clay from another hypothesis. This matters when you have to bet money, because careless bets will not maximize your expected payoff.

Imagine there is a little

light that flashes red, green, or blue each time you press a button. You have

to predict the color of the next flash and you can bet up to one dollar. If the

game uses a proper scoring rule, then

if the actual frequencies of the lights are 30% blue, 20% green and 50% red,

you maximize your average payoff by betting 30 cents on blue, 20 cents on green

and 50 cents on red. If you press the button twice in a row, you get the same

score regardless of whether you are scored once for your prediction P(green₁ and blue₂) or twice for P(green₁) and P(blue₂ | green₁).

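In Bayesian terms this is known as an invariance. Note that P(green₁) × P(blue₂ | green₁) = P(green₁ and blue₂). Mathematically, the scoring rule would be Score(P) = log(P), meaning that your score is the logarithm of the probability you assigned to the winner. Since the logarithm turns a product into a sum, log P(green₁) + log P(blue₂ | green₁) = log P(green₁ and blue₂), so it doesn’t matter whether we consider it two predictions or one prediction; we get the same result. If the actual frequency of color $i$ is $p_i$ and you assign it probability $q_i$, your expected score would be:

$$\mathbb{E}[\text{Score}] = \sum_i p_i \log q_i = 0.3\log q_{\text{blue}} + 0.2\log q_{\text{green}} + 0.5\log q_{\text{red}}$$

which is largest when each $q_i = p_i$.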

This is different from the colloquial way

of talking about degrees of belief.
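The log scoring rule from the light-flashing example can be checked numerically. In this sketch (function names are illustrative; natural logarithms are assumed, though any base gives the same ranking), betting in proportion to the true frequencies earns a higher expected score than a skewed allocation, and scoring two flashes jointly or one after the other gives the same total.

```python
import math

TRUE_FREQ = {"red": 0.5, "blue": 0.3, "green": 0.2}

def expected_log_score(assigned, true_freq=TRUE_FREQ):
    """Average of log(probability assigned to the color that actually flashes)."""
    return sum(p * math.log(assigned[color]) for color, p in true_freq.items())

honest = {"red": 0.5, "blue": 0.3, "green": 0.2}
skewed = {"red": 0.8, "blue": 0.1, "green": 0.1}   # hypothetical overconfident bets
print(expected_log_score(honest) > expected_log_score(skewed))  # True

# Invariance: two flashes, green then blue (assuming independent flashes,
# so P(blue2 | green1) is just P(blue)).
p_green1 = honest["green"]
p_blue2_given_green1 = honest["blue"]
joint = p_green1 * p_blue2_given_green1
two_scores = math.log(p_green1) + math.log(p_blue2_given_green1)
print(math.isclose(two_scores, math.log(joint)))  # True
```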

When people

say “I am 98% certain…”, what they usually mean is “I’m almost but not entirely

certain”, which reflects the strength of their emotion rather than the expected

payoff of betting 98% of their money on that outcome. But technically, your

confidence would be poorly calibrated if

you said that you were “98% sure” but you get more than two questions wrong out

of a hundred independent questions of equal difficulty. The Bayesian scoring

rule rewards accurate calibration.

But calibration is just one component; the other component is discrimination. This refers to

discriminating between right and wrong answers: the more probability you assign

to the right answer, the higher your score. You can be perfectly calibrated by

saying “50% probability” for all yes/no questions, but this is merely

confessing ignorance, and you can do better.
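To see calibration and discrimination come apart, here is a small sketch on made-up data (the twenty questions and both forecasters are hypothetical). Both forecasters are perfectly calibrated, but the one who assigns more probability to the right answers earns a better log score.

```python
import math
from collections import defaultdict

def log_score(forecasts, outcomes):
    """Sum of log(probability assigned to what actually happened)."""
    return sum(math.log(p if happened else 1 - p)
               for p, happened in zip(forecasts, outcomes))

def calibration_table(forecasts, outcomes):
    """For each stated probability of 'yes', the observed frequency of 'yes'."""
    buckets = defaultdict(list)
    for p, happened in zip(forecasts, outcomes):
        buckets[p].append(happened)
    return {p: sum(hits) / len(hits) for p, hits in buckets.items()}

# Hypothetical data: 20 yes/no questions, 10 of which are true.
outcomes = [True] * 9 + [False] + [True] + [False] * 9
ignorant = [0.5] * 20                        # "50%" on everything
discriminating = [0.9] * 10 + [0.1] * 10

print(calibration_table(ignorant, outcomes))        # {0.5: 0.5}
print(calibration_table(discriminating, outcomes))  # {0.9: 0.9, 0.1: 0.1}
print(log_score(ignorant, outcomes) < log_score(discriminating, outcomes))  # True
```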

Now imagine

an experiment which produces an integer result between zero and 99. You could

predict “a 90% probability of seeing a number in the fifties”, which is a vague

theory compared to “a 90% probability of seeing 51”. The precise theory has an

advantage because it concentrates its probability mass into a sharper point. So

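if we actually see the result 51, this is evidence in favor of the precise theory: assuming the vague theory spreads its 90% evenly over the fifties, it gives 51 only a 9% probability, against 90% for the precise theory, a likelihood ratio of 10:1. If the prior odds were 10:1 in favor of the vague theory, seeing a 51 would make the odds go to 1:1, and seeing a 51 again would then bring them to 10:1 in favor of the precise theory.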

However, the vague theory

would still score better than a hypothesis of zero knowledge (or maximum-entropy, which makes any result

equally probable). Even worse than

the ignorant theory would be a stupid theory which predicts “a 90% probability

of seeing a result between zero and nine”, and thus assigns only about 0.1% to the actual

outcome, 51. By making confident predictions of a wrong answer, it is thus

possible to have a model so bad that it is worse than nothing. Ignorance is

better than anti-knowledge.
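The numbers in this example can be reproduced directly. This sketch assumes each theory spreads its favored 90% evenly over the numbers it names and its leftover 10% evenly over all the others (an assumption the text implies but does not state); the function name is illustrative.

```python
def prob_of(outcome, favored, mass_on_favored=0.9, n_outcomes=100):
    """Probability a theory assigns to `outcome` if it puts `mass_on_favored`
    evenly on the set `favored` and spreads the rest evenly over everything else."""
    if outcome in favored:
        return mass_on_favored / len(favored)
    return (1 - mass_on_favored) / (n_outcomes - len(favored))

observed = 51
precise = prob_of(observed, {51})               # 0.9
vague = prob_of(observed, set(range(50, 60)))   # 0.09
ignorant = 1 / 100                              # maximum entropy
stupid = prob_of(observed, set(range(0, 10)))   # 0.1 / 90, about 0.0011

# Odds of vague : precise, starting at 10:1 and observing 51 twice.
odds = 10.0
for _ in range(2):
    odds *= vague / precise     # each 51 multiplies the odds by 1/10
    print(round(odds, 6))       # 1.0, then 0.1 (i.e. 10:1 for the precise theory)

print(vague > ignorant > stupid)   # True
```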

Under the laws of probability theory, it is not possible for both A and not-A to be evidence

in favor of B, so a true Bayesian can only test

ideas of which they are genuinely uncertain; they cannot try to prove a

preferred outcome or to prevent disproof. Unfortunately, human beings are not

Bayesians. People don’t distribute conserved probability mass over advance

predictions, but try to argue that whatever event did happen “fits” the

hypothesis they had in mind beforehand. The consequence of this is that people

miss their chance to realize that their models did not predict the phenomenon.
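The rule that A and not-A cannot both be evidence for B follows from the law of total probability: the prior equals the probability-weighted average of the possible posteriors. The probabilities in this sketch are arbitrary hypothetical values, and the function name is illustrative.

```python
def posteriors(p_b, p_a_given_b, p_a_given_not_b):
    """Return P(A), P(B|A) and P(B|not A) via Bayes's Theorem."""
    p_a = p_a_given_b * p_b + p_a_given_not_b * (1 - p_b)
    p_b_given_a = p_a_given_b * p_b / p_a
    p_b_given_not_a = (1 - p_a_given_b) * p_b / (1 - p_a)
    return p_a, p_b_given_a, p_b_given_not_a

p_b = 0.3                                  # hypothetical prior for B
p_a, up, down = posteriors(p_b, p_a_given_b=0.8, p_a_given_not_b=0.4)

# If seeing A raises P(B), seeing not-A must lower it...
print(up > p_b > down)                                  # True
# ...because the expected posterior equals the prior.
print(abs(p_a * up + (1 - p_a) * down - p_b) < 1e-12)   # True
```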

So when physics students observe a square plate of metal next to a hot radiator, and

feel that the side next to the radiator is cool and the distant side is warm,

the students may guess that “heat conduction” is responsible. This is a vague

and verbal prediction. If they had measured the temperature of the plate at different

points at different times and applied equations of diffusion and equilibrium,

they would soon see a pattern in the numbers, and their sharp predictions might

lead them to guess that the teacher turned the plate around before they entered

the room.
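To see what a sharp prediction from the diffusion equations looks like, here is a minimal one-dimensional sketch: a strip of metal whose near end is held at radiator temperature while the far end stays at room temperature. The temperatures, step sizes, and the reduction of a square plate to a 1-D strip are all hypothetical simplifications, using a standard explicit finite-difference scheme for the heat equation.

```python
def simulate_strip(n_cells=10, steps=2000, alpha=0.25, radiator=60.0, room=20.0):
    """Explicit finite-difference solution of the 1-D heat equation.

    Cell 0 touches the radiator and is held at `radiator`; the last cell is held
    at `room`. alpha = diffusivity * dt / dx**2 must be <= 0.5 for stability.
    All parameter values are hypothetical.
    """
    temp = [room] * n_cells
    temp[0] = radiator
    for _ in range(steps):
        new = temp[:]
        for i in range(1, n_cells - 1):
            # Each interior cell moves toward the average of its neighbours.
            new[i] = temp[i] + alpha * (temp[i - 1] - 2 * temp[i] + temp[i + 1])
        temp = new
    return temp

predicted = simulate_strip()
print([round(t, 1) for t in predicted])
# Conduction predicts the side touching the radiator is the warmest,
# with temperature falling steadily toward the far side.
print(predicted[0] > predicted[-1])  # True
```

Readings with the near side cool and the far side warm contradict this profile outright. The numerical prediction makes the mismatch impossible to explain away with "heat conduction", which is what prompts the guess that the plate was turned around.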

You now have a technical explanation of the difference between a verbal explanation and a technical explanation, because you can calculate exactly how technical an explanation is. Bayesian probability theory gives you a referent for what it means to “explain” something. Other technical subjects (like physics, computer science, or evolutionary biology) permit this too, which is why it is so important that people study them in school. And as long as you can apply the math and distinguish truth from falsehood, you are allowed to have fun and be silly.

A useful model is knowledge you can compute in reasonable time to predict real-world events you know how to observe. This is why physicists use different models to predict the flight of airplanes and collisions in a particle accelerator, even though both take place in the same universe with the same laws of physics. A Boeing 747 obeys Conservation of Momentum in real life, even if some aerodynamic models, which are cheap approximations, violate Conservation of Momentum a little. As long as the underlying fundamental physics supports the aerodynamic model, it can be a good approximation. Even a “vague” theory can be better than nothing, and given enough experiments, vague predictions can build up a huge advantage over alternative hypotheses. Such theories, if they produce not-precisely-detailed but still correct predictions, may be called “semitechnical” theories.
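To see how a vague theory accumulates an advantage, suppose (purely for illustration) that a theory says some outcome happens 60% of the time, a rival says 50%, and the outcome really does occur in 60% of trials. Each trial yields only a tiny likelihood ratio, but log-likelihood ratios add up across independent experiments. This Python sketch assumes independent binary trials; the helper name `bits_of_evidence` is hypothetical.

```python
import math

def bits_of_evidence(p_theory, p_rival, hits, misses):
    """Total log2 likelihood ratio (theory vs rival) for independent binary trials."""
    per_hit = math.log2(p_theory / p_rival)
    per_miss = math.log2((1 - p_theory) / (1 - p_rival))
    return hits * per_hit + misses * per_miss

for trials in (10, 100, 1000):
    hits = trials * 6 // 10      # the outcome occurs in 60% of trials (assumed)
    misses = trials - hits
    bits = bits_of_evidence(0.6, 0.5, hits, misses)
    print(f"{trials:>5} trials: {bits:6.2f} bits, likelihood ratio ~{2 ** bits:.3g}")
```

Ten trials barely move the needle, but the evidence grows linearly with the number of trials, so a thousand trials favor the vague-but-correct theory by a likelihood ratio in the hundreds of millions.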

But aren’t precise, quantitative theories still better than vague, semitechnical theories? Well, in the nineteenth century, Darwinian evolutionism was a semitechnical theory (it did not yet have quantitative models), but physics was already precise and mathematical. Physicists argued that the Sun could not have been burning for as long as evolution required, so people like Lord Kelvin challenged natural selection. Of course, evolution turned out to be correct. Nineteenth-century physics was a technical discipline, but it was incomplete: physicists didn’t yet know about nuclear reactions.

The lesson is that every correct theory about reality must be compatible with every other correct theory, and if there seems to be a conflict between two well-confirmed theories, then you are applying one of the two theories incorrectly or applying it outside the domain it predicts well.

The social process of Science requires you to make advance predictions. This custom exists to prevent human beings from making human mistakes. But the math of probability theory does not distinguish between advance predictions and post facto ones, which is why nineteenth-century evolutionism, which largely explained evidence already in hand, still worked. Today, evolutionary theory can make quantitative predictions about DNA and genetics, and as a technical theory it is far better than a semitechnical one. But controversial theories at the cutting edge of science are often semitechnical, or even nonsense.

The discipline of rationality is very important for distinguishing a good semitechnical theory (truth) from nonsense (falsehood). But scientific controversies should matter to you only if you are an expert in the field, or if they affect your life right now. For the rest of us, elementary textbook science shows the comprehensible beauty of settled science. If you do have a reason for following a scientific controversy, then you should pay attention to the warning signs that historically distinguished vague hypotheses that turned out to be gibberish from those that later became confirmed theories.

A sign of a poor hypothesis is that it expends great effort in avoiding falsification. In terms of Bayesian likelihood ratios, falsification is stronger than confirmation: a theory that confidently predicts an outcome gains only a little when the outcome occurs, but loses a great deal when it fails to occur. As Popper emphasized, the virtue of a scientific theory lies not in the outcomes it permits, but in the outcomes it prohibits. The same Bayesian scoring rule we saw earlier can be used to accumulate scores across experiments, and that total is what we should strive to maximize. The only mortal sin of Bayesianity is to assign probability 1 or 0 to an outcome, because this is like accepting a bet with a payoff of negative infinity.
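A short Python sketch of both points, using made-up numbers: a hypothesis H that gives an outcome O a 99% probability, compared against a rival that gives O 50%. Seeing O multiplies the odds in favor of H by less than 2, while failing to see O multiplies them by 0.02, a factor of 50 against. The same sketch shows why probability 0 is fatal under a logarithmic score. The function names are illustrative.

```python
import math

def likelihood_ratio(p_obs_given_h, p_obs_given_rival):
    """Factor by which an observation multiplies the odds of H versus the rival."""
    return p_obs_given_h / p_obs_given_rival

def log_score(p_assigned):
    """Log score (bits) for the probability a theory gave to what actually happened."""
    if p_assigned == 0:
        return -math.inf  # the "impossible" happened: an unrecoverable loss
    return math.log2(p_assigned)

# Illustrative numbers: H gives outcome O 99%, a rival gives O 50%.
p_h, p_rival = 0.99, 0.50
confirm = likelihood_ratio(p_h, p_rival)            # O happens
falsify = likelihood_ratio(1 - p_h, 1 - p_rival)    # O fails to happen

print(f"O observed:     odds x {confirm:.2f}")                               # odds x 1.98
print(f"O not observed: odds x {falsify:.2f} (about 1/{1 / falsify:.0f})")   # odds x 0.02 (about 1/50)
print(log_score(0.5), log_score(0.0))                                        # -1.0 -inf
```

No finite number of correct predictions can make up for a single score of negative infinity, which is why a Bayesian never assigns probability exactly 0 or 1.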

Making predictions in advance makes it easier to notice when someone is using too much probability mass to try to claim every possible outcome as an advance prediction. Imagine waking up one morning to find that your left arm has been replaced by a blue tentacle. How would you explain this? Well, you wouldn’t, because it’s not going to happen. There are verbal explanations (like divine intervention, aliens, or hallucination) that could “fit” the scenario, but such explanations can “fit” anything. If aliens did it, why would they do that particular thing to you as opposed to the other billion things they might do? What will the aliens do tomorrow? If you guess a model with no internal detail, or one that makes no further predictions, why would you even care? People play games with plausibility, explaining events they expect never to actually encounter.

If you had a “good explanation” for the hypothetical experience, then you would go to sleep worrying that your arm really would transform into a tentacle. Under Bayesian probability theory, probabilities are anticipations: if you assign probability mass to waking up with a blue tentacle, then you are nervous about waking up with a blue tentacle. To explain is to anticipate, and vice versa. If you don’t anticipate waking up with a tentacle, you need not bother crafting excuses you won’t use. Remember: since the beginning, not one unusual thing has ever happened.


