Four Strands of Everything

Book Review:

David Deutsch, "The Fabric of Reality", Penguin Books, 1998.

According to David Deutsch, science is not about attaining a collection of facts or predictions, but about explaining and understanding the fabric of reality. Despite the fact that the growth in our knowledge has led to the specialization of subjects into sub-fields as theories become broader, it is also true that at the same time, our theories have been superseded by deeper ones that simplify and unify the existing ones -- and Deutsch argues that depth is winning over breadth. In other words, we are heading toward a situation where one person would be able to understand "everything that is understood", thanks to a couple of deep, fundamental theories that contain within them the explanations of all subjects. We will have a Theory of Everything, and Deutsch is confident that he has a candidate for such a theory with his "four main strands" that together form a coherent explanatory structure: the theory of quantum physics, the theory of knowledge (epistemology), the theory of computation, and the theory of evolution. The world-view that Deutsch arrives at is not reductionist. He writes:

"The fabric of reality does not consist only of reductionist ingredients like space, time and subatomic particles, but also of life, thought, computation and the other things to which those explanations refer. What makes a theory more fundamental, and less derivative, is not its closeness to the supposed predictive base of physics, but its closeness to our deepest explanatory theories." (p. 28)Deutsch emphasizes that the ideas of Hugh Everett (quantum theory), Karl Popper (epistemology), Alan Turing (computation) and Richard Dawkins (evolution), to whom his book is dedicated, have not been taken seriously enough. One reason is that these theories have counter-intuitive implications, and another is that, when each of these four theories are taken individually, they seem narrow, cold, inhuman, pessimistic, and reductionist. As a result, Turing, Everett, Popper and Dawkins have been forced to defend their theories against obsolete rivals, which is unfortunate because (a) they could have better expended their intellectual energies on developing their fundamental theories further, but also because (b) their theories were already accepted (ironically) as the prevailing theories in their respective fields for use in practice -- just not as explanations of reality. The multiverse form of quantum theory is used by the majority of researchers in the fields of quantum cosmology and quantum computation, but outside these two branches, most physicists have an attitude of "pragmatic instrumentalism" (using the theory's equations for prediction without knowing or caring what they mean). Likewise, the Turing principle is the prevailing fundamental theory of computation, yet many folks still doubt that a true artificial intelligence (with consciousness) is possible even though the principle says it is. 
No serious biologist doubts Darwinian evolution by natural selection as a pragmatic truth, yet some do find it unsatisfactory as a full explanation of the adaptations in our biosphere. And Popper's epistemology is used every time a scientist decides to run an experiment to test a hypothesis, but few people incorporate the Popperian explanation of why this works into their world-view (perhaps because they yearn for an inductive principle of justification). What the four theories have in common is that they have displaced their predecessors in a pragmatic sense without being accepted as fundamental explanations of reality -- which means that to take them seriously would essentially amount to saying, "the prevailing theory is true after all!" And that is what Deutsch says regarding his unified theory of the fabric of reality. Moreover, the combination of the four strands eliminates the explanatory gaps of the individual theories:

"Far from denying free will, far from placing human values in a context where they are trivial and insignificant, far from being pessimistic, it is a fundamentally optimistic world-view that places human minds at the centre of the physical universe, and explanation and understanding at the centre of human purposes." (p. 342)How did Deutsch arrive at this conclusion? Well, to explain how the four strands are interdependent, and the above quote, I first need to explain each one on its own. But it should be kept in mind that the scientific process does not, according to Deutsch, follow Thomas Kuhn's idea of a paradigm revolution instigated by a group of young iconoclasts against the establishment's old fogies. While it is true that humans have biases and vested interests that make it hard to switch to a new fundamental theory, it is not impossible to see the merits of another paradigm or to switch paradigms, and in reality scientists and academics are open to criticism and amenable to reason. This means that the four theories became the prevailing ones of their subject-matters because they were objectively better than the previous explanations.

***

Quantum physics

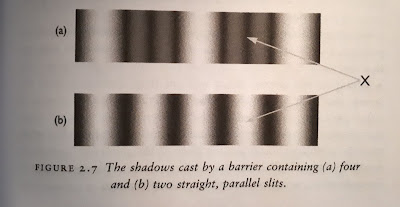

The first chapter on quantum physics, chapter 2, is titled "Shadows", which refers to "shadow particles" -- the author's own term for particles in other universes, in contrast to the tangible particles of this universe. Other universes?!? Well, if we conduct a double-slit experiment with photons we see a pattern of shadows like the one shown in (b) in the figure below, due to the fact that light "bends" and "frays".

However, by adding two more slits, what we see is that something has interfered with the light that used to arrive at point X in the figure. Using careful reasoning and evidence, Deutsch explains that whatever is causing the interference must be in the light beam; it can penetrate whatever light can, but is obstructed by anything that obstructs light; it is reflected by anything that reflects light; and so on. But we know that the photons do not split into fragments, nor do they deflect each other... so the interfering entities must be other photons, except that they cannot be seen (which is why Deutsch likes to call them shadow photons). We know they exist only indirectly through interference phenomena. We can also infer that, to explain the shadow pattern when we send a single photon, there must be many more shadow photons than tangible ones (at least a trillion times as many, in Deutsch's estimate). Moreover, the same thing applies to any kind of particle, not just particles of light. Therefore, according to Deutsch:

"It follows that reality is a much bigger thing than it seems, and most of it is invisible. The objects and events that we and our instruments can directly observe are the merest tip of the iceberg. Now, tangible particles have a property that entitles us to call them, collectively, a universe. [...] For similar reasons, we might think of calling the shadow particles, collectively, a parallel universe, for they too are affected by tangible particles only through interference phenomena." (p. 45)The collection of all the parallel universes together, in other words the whole of physical reality, is referred to as the multiverse. Each universe can affect the other ones only very weakly through interference. Each particle is "tangible" in one universe and "intangible" in all the others. It is important to note that the parallel universes are not "possibilities" -- something that is possible but nonexistent cannot deflect a real photon from its path; the interference must be caused by actual entities. The physicist Hugh Everett was the first (in 1957) to argue that quantum theory describes a multiverse, yet even today there is still a debate over how to interpret the implications of quantum theory, and many physicists use quantum theory only instrumentally in order to predict the outcomes of experiments.

Jumping to chapters 11 and 12, we examine the concept of time, which Deutsch argues was "the first quantum concept". Our everyday, common-sense conception of time is that time is like a line on which the present moment is a continuously moving point; hence the "flow" of time. However, this theory of time is nonsensical. The sweeping motion of "now" cannot also be regarded as a fixed moment as it is often shown in diagrams. That is because, as the author writes, "Nothing can move from one moment to another. To exist at all at a particular moment means to exist there for ever." No moment is objectively the present, and we do not move through time. Instead, what happens is that the universe changes and we experience "differences between our present perceptions and our present memories of past perceptions." Yes, physical objects do change with respect to time, but time itself (and therefore "now") does not change with respect to some meta exterior time, because there is no such thing.

Psychologically, we find it handy to think in terms of both (a) the moving present, and (b) a sequence of unchanging moments. But in physical reality we live in a "block universe", i.e. a static four-dimensional entity of spacetime (in both Newtonian physics and Einstein's theory of relativity). Nothing moves relative to spacetime, and what we consider "moments" or "snapshots" are slices through spacetime. However, doesn't this mean that the future is closed and therefore that we have no free will? Indeed, spacetime physics does not even accommodate cause-and-effect or counterfactual statements. So at this point Deutsch rejects spacetime physics in favor of the quantum multiverse, which does allow us to make statements like: "if Faraday had died in 1830, then X would have happened". Here, the multiverse is almost like a collection of interacting spacetimes, wherein a particular snapshot of one universe is partially determined (through interference) by other universes. But this is just an approximation, and it is not true that a snapshot from one universe happens "at the same moment" as a snapshot from another universe (because again there is no overarching framework of time outside the multiverse). This implies that there is no fundamental distinction between snapshots of other times in our universe, and snapshots of other universes. Therefore, as Deutsch concludes: "other times are just special cases of other universes." This quantum conception of time lets us talk about the proportion of universes in the multiverse that have a certain property. While the multiverse itself is deterministic, within one universe we as observers can only make subjective probabilistic predictions -- in essence this is because information is "distributed" across different universes. 
Nevertheless, due to the fact that universes different from this one exist, and exist in different proportions, it can be objectively true that "property X is a cause of Y", and that relative to an observer, the future is open (because from his/her point of view it has multiple possible outcomes).

After David Deutsch has presented the ideas that there are parallel universes and that time does not flow, you may think it cannot get weirder. Yet it does, for he also attempts to understand the theory of time travel. This chapter is hard to follow, so I won't summarize it too extensively. To be clear, Deutsch does not argue that time travel is practically feasible. What he argues is that it is not paradoxical in theory. Famously, the "grandfather paradox" imagines that one travels into the past to kill one's grandfather before he had children, thus preventing oneself from traveling into the past in the first place. However, according to the multiverse view of time travel, you would basically travel to the past of a parallel universe identical to yours (but you cannot change the snapshots you remember being in, because again, there is no flow of time). Because counterfactuals are possible under multi-universe quantum physics, you could still "change the past" by choosing "to be in a different snapshot from the one [you] would have been in if [you] had chosen differently". As to whether such time travel is possible, we would first need a quantum theory of gravity (which nobody has formulated yet). Even if it is possible, it would be subject to limitations, for example (i) a time machine cannot provide pathways to times earlier than the moment at which it came into existence; and (ii) the way in which two universes would become connected would depend on the time-traveling participants' states of mind (e.g. you cannot visit a universe where your copy does not intend to use a time machine). Of course, future-directed time travel is another matter entirely, and is simpler -- just get a fast enough space rocket.

Epistemology

In chapter 3, Deutsch presents science as a process of problem-solving, wherein the "problems" are usually the flaws in our existing explanations of reality, and the solutions come in the form of better theories (which themselves may have problems). A schema of the process is as follows:

- Problem

- Conjectured solutions

- Criticism, including experimental tests

- Replacement of erroneous theories

- New problem

"We never draw inferences from observations alone, but observations can become significant in the course of an argument when they reveal deficiencies in some of the contending explanations. We choose a scientific theory because arguments, only a few of which depend on observations, have satisfied us (for the moment) that the explanations offered by all known rival theories are less true, less broad or less deep." (p. 69).Out of the four strands, this is actually the one I personally have most issues with. When it comes to epistemology, David Deutsch completely neglects the Bayesian perspective on inference, which says that we can raise our confidence that a hypothesis is true when we observe evidence that is more likely to exist if the hypothesis is true than if it is false. Moreover, Bayes's Theorem tells us to integrate the evidence with the prior probability of the hypothesis, which means that you have to consider previous observations. So yes, while we cannot be certain that our theories are true, they are in some sense justified by observations (in the sense that a theory may have a higher posterior probability than any of its main rivals). Interestingly, there are other physicists who embrace Bayes, such as Sean Carroll -- but of course it is legitimate to ask whether Deutsch's focus on the process of science means that it is not fair to compare him to others who focus on more general ways of knowing; after all, science is not the same thing as rationality.

That being said, the discussion continues in chapter 4 of The Fabric of Reality, which asks how our brains can draw conclusions about external reality (assuming that solipsism is false). The answer Deutsch gives has to do with the idea that physical objects "kick back" when we kick them; this is inspired by a scene from J. Boswell's "Life of Johnson" wherein Dr Johnson kicks a large rock. In other words, an entity is real and exists if "according to the simplest explanation, [the] entity is complex and autonomous" (p. 96). Incidentally this is also why shadow photons are real -- they kick back by interfering with visible photons. In addition to this criterion, we also prefer explanations that are simpler (see Occam's Razor) yet simultaneously account for greater detail and complexity in phenomena. This complexity can be phrased in computational terms, which is the bridge between the theory of knowledge and the theory of computation.

But before diving into computation, we briefly jump to chapter 10 which discusses "The Nature of Mathematics". The main thesis here is that the reliability of mathematical arguments is contingent on physical and philosophical theories, and therefore mathematics cannot provide absolute certainty (contrary to what mathematicians would like to think). Deutsch rejects the traditional hierarchy of mathematical > scientific > philosophical arguments, and he also rejects the Platonic idea that abstract forms are "even more real" than the physical world. Instead, we must realize that the conclusions we logically deduce are no more secure than the assumptions we start with; and while the abstract entities that mathematical proofs refer to are real (because they are complex and autonomous), our knowledge of those entities is derivative from our knowledge of physics. According to Kurt Gödel's Incompleteness Theorem, no set of rules of inference can validate a proof of its own consistency, and even if a set of rules of inference were consistent, it would fail to designate some valid method of proof as valid. Therefore, "there will never be a fixed method of determining whether a mathematical proposition is true" (p. 235), and "progress in mathematics will always depend on the exercise of creativity" (p. 236). Science is about explaining and understanding the physical world, and mathematics is about explaining and understanding the world of mathematical abstractions -- but as Deutsch notes, mathematical intuition is a species of physical intuition.

There is one more chapter about epistemology (chapter 7) that seems shoehorned into the book, and is different from the rest of the book in that the bulk of it is in dialogue format. Here, the character David (standing in for the author) argues against a "crypto-inductivist", who believes that the invalidity of inductive justification is a problem for the foundations of science and that we need some way to justify our reliance on scientific theories. David argues that we can be rationally justified (if only tentatively so) in relying on the predictions of a theory if the theory provides a good explanation, or is preferable to all rival explanations. And this depends not purely on the evidence, but on argument -- hence Popper's emphasis on "rational controversy" and the "problem-situation". A theory is only corroborated relative to other contending/rival theories. And because theories are meant to solve problems, we should be wary of postulates that solve no problems, and theories that postulate anomalies without explaining them. Insofar as the assumption "the future resembles the past" does not imply anything specific about the future and does not justify any prediction about the future (because it applies to any theory), there is no principle, process, or problem of induction. So, David concludes, Popper indeed solved the "problem of induction". Needless to say, my earlier criticism applies here too.

Theory of computation

One of the fascinating things about The Fabric of Reality is the prominent role played by the concept of virtual reality (VR) in the book. Deutsch uses VR as an illustration of universality -- the idea that it is possible to build a computer that can mimic the computations of any other computer. A true virtual reality machine would be able to give us the experience of being in any specified environment, including the sensory experiences. Of course, VR can generate only logically possible, "external" experiences (for example the experience of piloting an aircraft or flying faster than the speed of light) but not logically impossible (e.g. factorizing a prime number) or "internal" (e.g. experiencing colors outside the visible range) experiences. Nevertheless, such a machine would be reminiscent of Descartes's "evil demon". Deutsch envisions a VR generator consisting of a set of sensors (to detect what the user is doing), a set of nerve-stimulating (i.e. image generating) devices, and most importantly, a computer in control.

The book was first published in 1997, which explains why the computer in the figure looks like that. In any case, the main challenge for VR will be to program the computer to render various environments. This relates to epistemology and physics, because the "program in a virtual-reality generator embodies a general, predictive theory of the behavior of the rendered environment" (p. 117). But here comes the more surprising part: what normally goes on inside our brains is in many ways like a virtual-reality rendering! As Deutsch writes:

"We realists take the view that reality is out there: objective, physical and independent of what we believe about it. But we never experience that reality directly. Every last scrap of our external experience is of virtual reality. And every last scrap of our knowledge -- including our knowledge of the non-physical worlds of logic, mathematics and philosophy, and of imagination, fiction, art and fantasy -- is encoded in the form of programs for the rendering of those worlds on our brain's own virtual-reality generator." (p. 121)The following chapter continues by discussing the ultimate limits of computation. Is it possible, for every logically possible environment, to program the generator to render that environment? Well, in principle a VR generator could employ various technological tricks (such as slowing down the processing speed of the user's brain) to render complicated environments. But it would not be able to render the set of all logically possible environments, because "there are infinite quantities greater than the infinity of natural numbers" -- this is essentially Georg Cantor's diagonal argument. In other words, what the argument says is that it is always possible to program the generator to render an environment that is recognizably different from any other environment listed in the machine's repertoire -- even if we already have an infinitely long list of possible programs! According to Deutsch, these are environments we cannot go to using the VR generator; hence the name "Cantgotu environments" (another term invented by the author, in reference to Cantor, Gödel and Turing). So how large can our VR repertoire be? We know that it is possible (in theory) to build a universal virtual-reality generator, thanks to the Turing Principle, which says in its various forms:

- "There exists an abstract universal computer whose repertoire includes any computation that any physically possible object can perform."

- "It is possible to build a universal computer: a machine that can be programmed to perform any computation that any other physical object can perform."

- "It is possible to build a virtual-reality generator whose repertoire includes that of every other physically possible virtual-reality generator."

- "It is possible to build a virtual-reality generator whose repertoire includes every physically possible environment."
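As an aside on the diagonal argument mentioned earlier: the construction behind the "Cantgotu environments" can be sketched in a few lines. This is only a toy illustration under my own assumptions. An "environment" is reduced to an infinite sequence of bits, and the `listing` function is a made-up stand-in for the generator's repertoire, not anything taken from the book.

```python
from typing import Callable

# A sequence maps a position n to a bit; it stands in for an "environment".
Sequence = Callable[[int], int]


def diagonal(listing: Callable[[int], Sequence]) -> Sequence:
    """Build a sequence that differs from the n-th listed sequence at position n."""
    return lambda n: 1 - listing(n)(n)


def listing(k: int) -> Sequence:
    """Hypothetical repertoire: entry k marks the multiples of k + 1."""
    return lambda n: 1 if n % (k + 1) == 0 else 0


new_sequence = diagonal(listing)

# Whatever the listing contains, the new sequence disagrees with entry k
# at position k, so it cannot be equal to any entry in the list.
differs_everywhere = all(new_sequence(k) != listing(k)(k) for k in range(1000))
print(differs_everywhere)
```

The check only inspects the first thousand entries, but the construction itself guarantees the disagreement for every entry, which is the heart of Cantor's argument.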

Chapter 9 explains that quantum computers exploit quantum interference, which makes them different from classical (i.e. Turing-inspired) computers. According to Deutsch, quantum computers distribute "components of a complex task among vast numbers of parallel universes" (p. 195), although standard explanations of quantum computing make reference to the idea of superposition rather than parallel universes. Nevertheless, there are implications for computational complexity theory: computational tasks have traditionally been classified into those that are tractable (i.e. their resource requirements, such as time, do not increase exponentially with the number of digits in the input) and those that are intractable, but quantum computation would allow us to render environments that are classically intractable. In practice, this would pose a challenge for RSA cryptography, which relies on the intractability of factorizing large numbers for its security... yet it would also enable unbreakable quantum cryptography (assuming we overcome the hurdles of practical implementation). I also wonder what the implications are for algorithms to live by.
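To get a feel for what "intractable" means here, the sketch below counts how many trial divisions the most naive classical method needs to factor a product of two similar-sized primes. It is my own illustration, not an example from the book. Real attacks on RSA use far better classical algorithms than trial division, and the quantum approach is not shown at all.

```python
from typing import Tuple


def smallest_factor_with_steps(n: int) -> Tuple[int, int]:
    """Return the smallest prime factor of n and the number of divisions tried."""
    steps = 0
    d = 2
    while d * d <= n:
        steps += 1
        if n % d == 0:
            return d, steps
        d += 1
    return n, steps  # no divisor found, so n itself is prime


# Products of two neighbouring primes, chosen for illustration.
for p, q in [(101, 103), (1009, 1013), (10007, 10009)]:
    n = p * q
    factor, steps = smallest_factor_with_steps(n)
    print(f"{n} ({len(str(n))} digits): factor {factor} after {steps} divisions")
```

Each time the number gains two digits, the work grows roughly tenfold, so the cost climbs exponentially with the length of the input rather than with its value.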

Theory of evolution

Compared to the other three strands of the fabric of reality, evolution gets relatively little space in the book. However, Deutsch's treatment of the subject here (Chapter 8) is in a way very humanistic. While modern science, including biology, seems to indicate that life is not a fundamental phenomenon of nature and that we are merely molecular replicators governed by the same laws of physics as inanimate matter, Deutsch argues that life is (a) a manifestation of virtual reality and therefore linked to the Turing principle; and (b) significant in the universe because the future of life will determine the future of stars and galaxies. Life may be considered "fundamental" because we need to understand it if we hope to have a deep understanding of the world. Even if we limit ourselves to the physical world, living organisms (on Earth) are based on replicators called genes (which are composed of chains of adenine, cytosine, guanine and thymine molecules) that are essentially computer programs that cause a niche environment to make copies of them... and as such, an organism is "a virtual-reality rendering specified by its genes", whereby the accuracy of the rendering corresponds to the genes' degree of adaptation to their niche. Genes thus embody knowledge about their niches, and lead to the survival of knowledge. Genes are the means through which the Turing principle is implemented in nature, and knowledge may be considered fundamental due to its astrophysical effects -- for example, our descendants might be able to use their knowledge of stars to prevent the Sun from becoming a red giant. Whether or not they succeed, the point is that the Sun's future depends on what happens to intelligent life on Earth. And it gets more impressive when we consider the multiverse perspective:

"Where there is a galaxy in one universe, a myriad galaxies with quite different geographies are stacked in the multiverse. [...] But nothing extends far into other universes without its detailed structure changing unrecognizably. Except, that is, in those few places where there is embodied knowledge. In such places, objects extend recognizably across large numbers of universes. [...] the largest distinctive structures in the multiverse." (p. 192)Now we can see how the four strands relate to each other.

***

Each of the four theories individually has an explanatory gap that is filled by the other strands. In the case of the Turing principle, people may wonder how mental phenomena like consciousness or free will can be explained in physical terms. While Deutsch does not believe that consciousness depends on specific quantum-mechanical processes, he does maintain that the multiverse view of knowledge will help provide us with an understanding of consciousness one day. He also asserts that the multiverse is compatible with concepts like free will. Thus we are able to translate common statements into their physical representations; for example:

- "After careful thought I chose to do X, but I could have chosen otherwise." --> "After careful thought some copies of me, including the one speaking, chose to do X, but other copies of me chose otherwise."

- "It was the right decision." --> "Representations of the moral or aesthetic values that are reflected in my choice of option X are repeated much more widely in the multiverse than representations of rival values."

The final chapter (14) reiterates the idea that our knowledge is continuing to take on a comprehensible unified structure, and also that this structure is not being swallowed by fundamental physics. Each of the four strands is "monolithic" by itself, yet together they are not hierarchical, and they help to explain each other. At this point Deutsch introduces Frank Tipler's omega-point theory, which is hard to explain briefly but essentially suggests that at the end of the universe (the Big Crunch) there will be an infinite amount of knowledge created in a finite time by intelligent beings who are able to manipulate the gravitational oscillations over the whole of space, and who will then (thanks to virtual reality) experience subjectively infinite lives. [This is perhaps the most radically speculative part of the book.] Deutsch does not agree with Tipler that the intelligences at the omega point will be omniscient and omnipotent, but agrees that the creation of some kind of omega point is compatible with our current best knowledge of the fabric of reality. But it seems that the reason why Deutsch brings this up is to discuss morality -- how will our far-future descendants choose to behave? Is there an objective basis to "right" and "wrong"? Well, we know that we can choose to override our inborn (genetic) and socially conditioned behaviors for moral reasons, and that we give moral explanations for changing our preferences. So according to Deutsch, we can explain moral values as attributes of physical processes without deriving them from anything, if doing so contributes to our understanding of reality. In this view, which the author only proposes tentatively, we may regard moral concepts (e.g. "human rights") as existing objectively as a kind of "emergent usefulness" by relation of "emergent explanation". Yet even at the omega point, our descendants' knowledge will not be certain, and they will disagree with one another. 
Solved controversies will be replaced by ever more exciting and fundamental ones... this seems to be what Deutsch has in mind for our destiny.

***

"The Bayesian revolution in the sciences is currently replacing Karl Popper’s philosophy of falsificationism. If your theory makes a definite prediction that P(X|A) ≈ 1 then observing ~X strongly falsifies A, whereas observing X does not definitely confirm the theory because there might be some other condition B such that P(X|B) ≈ 1. It is a Bayesian rule that falsification is much stronger than confirmation – but falsification is still probabilistic in nature, and Popper’s idea that there is no such thing as confirmation is incorrect."Whereas David Deutsch makes no mention of Bayes in his book, Sean Carroll does in "The Big Picture", which also attempts to provide an integrative (and somewhat "emergentist") world-view on cosmology, epistemology, life, morality and so on. But putting Bayes aside, it seems that Deutsch shares a predilection for "Gödel, Escher, Bach" (written by Douglas Hofstadter) with Yudkowsky, who has also been inspired by GEB. Deutsch mentions the book in his bibliography, and a few other references (such as the dialogue chapter, the inclusion of Cantor, Gödel, and Turing, the discussion of self-replicators etc.) are subtle signs throughout. The basic map-territory distinction is a stance shared by Deutsch and Yudkowsky. The idea that we could in the future have human minds running on artificial computer hardware (e.g. "uploads" or "emulations") is one that Deutsch shares with Robin Hanson. Overall, it seems like Deutsch would fit in nicely in the Rationality community.

There are some neat things I learned from this book; one of them is that to defeat an idea like solipsism, one should take it seriously as an explanation of the world, and watch how it self-destructs and forces one into needlessly complicated assumptions. In addition, the book is written in clear language, albeit somewhat snarky at some points. A very useful feature of the book is the "terminology" and "summary" sections at the end of each chapter. Although the author jumps back-and-forth between the four strands, the later chapters at least build on the earlier ones. Overall, this is a book I would definitely recommend.
