Life Actually

Book Review:

Max Tegmark, "Life 3.0: Being Human in the Age of Artificial Intelligence", Penguin Books, 2018.

A great way to follow up on Yuval Noah Harari's Homo Deus is to look at a different perspective on our future as human beings. Whereas Homo Deus pessimistically forecasts the irrelevance of humankind after the proliferation of superintelligent algorithms, the book Life 3.0 by MIT cosmologist Max Tegmark explores a range of possible futures, from utopias to dystopias and the ground in between. These "AI aftermath scenarios" are also summarized on the book's companion website from the Future of Life Institute (where Tegmark is one of the founders). But the book is about much more than that -- it also attempts to address questions about the nature of intelligence, consciousness, goals, and the end of the universe. And while Life 3.0 may be speculative at times, the author is at least honest about it, to the point of including a table with the epistemic status of each chapter (on page 47 of the edition I read). Rarely do authors offer such courtesy.

The book begins with "The Tale of the Omega Team", which is a fictional account of one possible future with AI. The Omegas secretly build a general artificial intelligence called Prometheus, which they use to make an enormous amount of money through Amazon MTurk and their own animated entertainment company. Eventually Prometheus is capable of inventing new technologies and influencing politics such that traditional power structures erode (thanks to an agenda of democracy, tax cuts, government social service cuts, military spending cuts, free trade, open borders, and socially responsible companies). Through careful and strategically optimal planning, the Omegas are able to take over the world. But their ultimate goal is left blank. The purpose of this prelude is to get the reader to start thinking: what kind of future do you want to create?

The book ends with a different tale: that of the Future of Life Institute (FLI) team. In this epilogue, Max Tegmark discusses his own efforts at working toward a good future, which include being a co-founder of FLI and organizing the 2015 Puerto Rico and 2017 Asilomar conferences about AI safety. These conferences had an impressive list of attendees, including the likes of Jaan Tallinn, Stuart Russell, Anders Sandberg, Stuart Armstrong, Luke Muehlhauser, Toby Ord, Joscha Bach, Demis Hassabis, Robin Hanson, Elon Musk, Eliezer Yudkowsky, Sam Harris, Nick Bostrom, Vernor Vinge, Ray Kurzweil, Daniel Kahneman, Eric Drexler, David Chalmers, William MacAskill, Erik Brynjolfsson, and Larry Page, among others. You may recognize some of these names if you are familiar with Silicon Valley, the rationality community, Effective Altruism, or transhumanism. (And if not, legend has it that there are "engines" for searching the web.) In any case, the book concludes with a list of the Asilomar AI Principles and a note about "mindful optimism", which is "the expectation that good things will happen if you plan carefully and work hard for them" (p. 333). We need existential hope, and therefore positive visions for the future. That is why Tegmark wants his readers to join "the most important conversation of our time".

Between the prelude and epilogue are eight chapters, divided into two parts. The first part (Ch. 1-6) deals with the history of intelligence, from start to finish. The first three chapters, which discuss key concepts, the fundamentals of intelligence, and near-future challenges (e.g. jobs and AI weapons), are generally not very speculative. The next three chapters are speculative, exploring possible superintelligence scenarios, their aftermath over the subsequent 10,000 years, and the next billion years and beyond. Part two of the book (Ch. 7 and 8) is more about the history of meaning, including goal-oriented behavior and natural and artificial consciousness. The chapter on goals is not very speculative, but the one on consciousness is, given the thorny topic. Overall, the book contains some very interesting content, which I shall summarize.

***

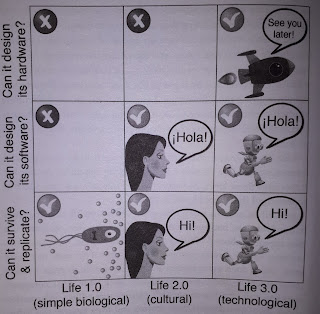

Chapter 1 explains the book's title, "Life 3.0". Basically, life can evolve through three stages of sophistication. In Life 1.0, simple biological life-forms specified by DNA are able to survive and replicate but unable to redesign their hardware or software; bacteria, for example, evolve slowly over many generations through trial and error. In contrast, Life 2.0 allows for software updates during an organism's lifetime, for example through cultural learning. This is why humans are smarter and more flexible than Life 1.0 organisms. However, we are not yet fully masters of our own destiny, because we are limited in the degree to which we can redesign our hardware -- that is what the technological stage, Life 3.0, will be about when it arrives. Thanks to artificial intelligence, we may be able to launch Life 3.0 this century.

*Figure 1.1 on page 26*

If Life 3.0 is within our grasp, then what future should we aim for, and how can we accomplish it? This question is controversial, with different camps holding different perspectives. There are "Luddites" who oppose AI and are convinced of a bad outcome, but Tegmark mostly ignores this group. Instead he focuses on the "Digital Utopians", who wholeheartedly welcome Life 3.0 (e.g. Ray Kurzweil and Larry Page); the "Techno-Skeptics", who believe we need not worry about superhuman AGI because it will not arrive in the foreseeable future (e.g. Andrew Ng and Rodney Brooks); and the "Beneficial-AI Movement", which believes that AGI is likely but that a good outcome is not guaranteed without hard work (e.g. Stuart Russell and Nick Bostrom). Needless to say, the author himself belongs to this third group.

*Figure 1.2 on page 31*

The author also addresses several "pseudo-controversies" that arise from misconceptions. Common myths about superintelligent AI include the following:

- Superintelligence by 2100 is inevitable/impossible

- Only Luddites worry about AI

- The worry is that AI will turn evil or conscious

- Robots are the main concern

- AI cannot control humans

- Machines cannot have goals

- The worry is that superintelligence is just years away

"If you feel threatened by a machine whose goals are misaligned with yours, then it's precisely its goals in this narrow sense that trouble you, not whether the machine is conscious and experiences a sense of purpose" (p. 44).

Additionally, there are misconceptions that occur because people do not use the same definitions of certain words, especially "life", "intelligence" and "consciousness". Tegmark prefers to define these terms very broadly, so as to avoid anthropocentric bias. Thus, life is "a process that can retain its complexity and replicate". Intelligence is "the ability to accomplish complex goals". Consciousness is "subjective experience". These definitions enable us to move beyond the sorts of biological, intelligent, and conscious species that exist so far. This will be important when exploring the future of intelligence.

Chapter 2 explains how intelligence began billions of years ago in the form of seemingly dumb matter. Tegmark provides a naturalistic explanation of intelligence, which is fundamentally about memory, computation, and learning. To be fair, the author never claims that rocks are intelligent, but it is still surprisingly simple to create an object that "remembers": all you need is something that can be in many different stable states (such as a hilly surface with sixteen different valleys in it, plus a ball). You can then use the device to store bits (i.e. binary digits) of information; sixteen valleys, for instance, are enough to store four bits, since 2^4 = 16. The physical substrate of the information does not matter -- you can use electric charges, radio waves, laser pulses, and so on; hence memory is substrate-independent. The same goes for computation and learning. Computation is simply the transformation of one memory state into another by implementing a function. While functions range from simple (like squaring a number) to complex (like winning a game of chess against a world champion), it is an amazing fact that any computable function can be built out of NAND gates, which output C = 0 when both inputs are A = B = 1 and C = 1 otherwise. When a clump of matter can rearrange itself to get better at computation, this is called learning. But there is nothing mysterious about learning: Darwinian evolution is an example of learning at the species level. Even more simply, a soft clay surface can "learn" a number like pi if you repeatedly place a ball at position x = 3.14159. In sum, any chunk of matter can be the substrate for memory, computation and learning.
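To make the NAND claim a little more concrete, here is a minimal Python sketch (my own illustration, not code from the book) that derives NOT, AND, OR, XOR and a one-bit half adder purely from a single `nand` function. All function names are hypothetical. Adding two bits is only a tiny example of a function; building larger computations this way also relies on chaining many gates and feeding results back into memory.

```python
# Sketch: every gate below is built only from NAND, illustrating the
# universality idea summarized from Chapter 2 (illustrative names only).

def nand(a: int, b: int) -> int:
    """Output 0 only when both inputs are 1; otherwise output 1."""
    return 0 if (a == 1 and b == 1) else 1

def not_(a: int) -> int:
    # Feeding the same bit into both inputs inverts it.
    return nand(a, a)

def and_(a: int, b: int) -> int:
    # AND is simply NAND followed by NOT.
    return not_(nand(a, b))

def or_(a: int, b: int) -> int:
    # De Morgan: a OR b == NAND(NOT a, NOT b)
    return nand(not_(a), not_(b))

def xor(a: int, b: int) -> int:
    # Classic four-NAND construction of XOR.
    n = nand(a, b)
    return nand(nand(a, n), nand(b, n))

def half_adder(a: int, b: int) -> tuple[int, int]:
    """Add two bits, returning (sum, carry), using only NAND-derived gates."""
    return xor(a, b), and_(a, b)

if __name__ == "__main__":
    for a in (0, 1):
        for b in (0, 1):
            print(a, b, "NAND:", nand(a, b), "half adder (sum, carry):", half_adder(a, b))
```

Because every gate bottoms out in `nand`, swapping the body of `nand` for a different physical mechanism would leave the rest untouched, which is one way to read the review's point about substrate independence.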

(Those who find the implications of these computer science principles interesting might also like Algorithms to Live By.)

The important takeaway here is that machines might become as intelligent as humans sometime this century. As we have seen, intelligent matter need not be biological. Already today, machines are good at playing chess, proving math theorems, trading stocks, captioning images, driving, transcribing speech, and diagnosing cancer. How long will it take until machines can out-compete us at writing books, programming, doing science, making art, and designing AI? Since the mid-1900s, computer memory (measured in bytes) and computing power (measured in floating-point operations per second) have become twice as cheap roughly every couple of years. New technologies (like quantum computers) might help us maintain this trend of persistent capability doubling. Yet before AI reaches human level across all tasks, we will face a number of near-future questions.
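Compounding is what makes this trend so dramatic. Below is a minimal Python sketch, assuming a fixed halving time for the cost of compute; the review only says "roughly every couple of years", so the two-year halving time and the 60-year span are illustrative assumptions, not figures from the book.

```python
# Back-of-the-envelope sketch: if the cost of compute halves every
# `doubling_years`, how many times cheaper is it after `span_years`?

def cost_reduction_factor(span_years: float, doubling_years: float) -> float:
    """Return how many times cheaper compute becomes after span_years."""
    return 2 ** (span_years / doubling_years)

if __name__ == "__main__":
    # Assumed inputs: a two-year halving time sustained for 60 years,
    # i.e. 30 halvings in a row.
    factor = cost_reduction_factor(60, 2)
    print(f"{factor:.3g} times cheaper")  # 2**30, roughly a billion
```

Thirty halvings already amount to a factor of about a billion, which is why even a modest-sounding doubling time matters so much over decades.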

Chapter 3 looks at questions like: "Should we start a military AI arms race? How should laws handle autonomous systems? How to make AI more robust and less hackable? Which jobs will get automated first? What careers should kids pursue? How to make a low-employment society flourish?" The march of technology has yielded breakthroughs in deep reinforcement learning (see AlphaGo), natural language processing, and other areas of artificial intelligence. It is becoming harder to argue that AI completely lacks intuition, creativity or strategy. Therefore, in the near-term, we have to deal with issues like making AI systems more robust against hacks or malfunctions, making "smart" weapons less prone to killing innocent civilians, updating our legal systems to be more fair and efficient, and growing our prosperity without leaving people lacking income or purpose.

If we are going to put AI in charge of our power grid, stock market, or nuclear weapons, then it had better be robust in the sense of verification, validation, security, and control. In other words, we need to ensure that the software works as intended (verification), that we build the right system specifications given our goals (validation), that human operators can monitor and if necessary change the system (control), and that we are protected from deliberate malfeasance (security). The legal process could be improved and automated using "robojudges", which might be faster and less biased than human judges, although making them transparent would be a challenge. Further legal controversies include the regulation of AI development, the protection of privacy, and granting rights to machines.

In terms of AI-based weapons, it is clearly important for these systems to be robust. Perhaps more importantly, we need to avoid an arms race that would make AI weapons ubiquitous and therefore easier for dictators, warlords and terrorists to acquire and use for killing people. A ban on lethal autonomous weapon systems may gather support from superpowers because, as with biological and chemical weapons, small rogue states and non-state actors stand to gain the most from an arms race. Even so, we may still face the risk of devastation from cyberwarfare.

Looking at the job market, machines are increasingly replacing humans. This is not inherently a bad thing, because automation and AI have the potential to create new and better products and services while giving people more leisure time to enjoy democracy, art and games. However, without wealth-sharing, inequality is likely to keep rising -- here Tegmark cites the arguments of Erik Brynjolfsson and Andrew McAfee. In the long term, we may have to face the consequences of human-level artificial general intelligence.

Chapter 4 examines how an "intelligence explosion" (or "Singularity") could happen, whereby recursive self-improvement rapidly leads to general intelligence far beyond human level. The British mathematician I.J. Good coined the term "intelligence explosion" back in 1965 when he wrote:

"Let an ultraintelligent machine be defined as a machine that can far surpass all the intellectual activities of any man however clever. Since the design of machines is one of these intellectual activities, an ultraintelligent machine could design even better machines; there would then unquestionably be an 'intelligence explosion,' and the intelligence of man would be left far behind" (p. 4 in Life 3.0).

The real danger is not some Hollywood Terminator story, but a group such as the Omegas or a government using superintelligence to take over the world and implement a totalitarian regime, or alternatively losing control over the AI so that the AI itself then takes over and implements its own goals (whatever those may be). But how would an AI break free? Well, it could sweet-talk its way out (i.e. psychological manipulation), or hack its way out, or recruit the help of unwitting outsiders. Most likely, its superior intelligence and understanding of computer security would enable it to use methods that we humans cannot currently imagine.

A fast takeoff with a unipolar outcome is not the only possible scenario. We might also experience a slower intelligence explosion taking years or decades, in which case a multipolar outcome (wherein many independent entities share a balance of power) is more plausible. In this scenario, technology would affect existing hierarchies by enhancing long-distance coordination, although the effects seem to be mixed. For example, surveillance could increase top-down power, but cryptography and free education could empower individuals. Another possibility is the creation of cyborgs (human-machine hybrids) and uploads (also known as brain emulations). Tegmark mentions Robin Hanson's The Age of Em, which explores a hypothetical future dominated by the em economy. Incidentally, though Tegmark does not mention this explicitly, Robin Hanson is known as a believer in a slow takeoff scenario, and famously debated this point with Eliezer Yudkowsky in the so-called AI-Foom Debate. Max Tegmark himself seems agnostic about the speed of takeoff, but he does write that brain emulation is unlikely to be the quickest route to superintelligence (because there may be simpler ways, just as we don't build planes that flap their wings like birds). Of course, we have no idea what will happen once we build AGI, but we can start by asking, what should happen?

In Chapter 5 we finally get the speculative aftermath scenarios, which pertain primarily to the questions "How is AI controlled?" and "How will humans be treated?". Tegmark lists twelve possible scenarios and invites the reader to consider which ones are preferable. These scenarios vary in terms of whether superintelligence exists, whether humans exist, whether humans are in control, whether humans are safe, whether humans are happy, and whether consciousness exists. The answer to all of these questions is "No" in the case of the Self-destruction scenario, whereby we drive ourselves extinct before creating superintelligence, possibly due to nuclear war, doomsday devices like cobalt-salted nukes, biotech, or relatively dumb AI-based assassination drones. Humanity might also die out but get replaced by superintelligent AI, either because the AIs are our Descendants (we view them as our worthy "mind children") or our Conquerors (we get eliminated by a method we don't see coming, because the AI decides we are a threat, nuisance, or waste of resources).

In the scenarios where humans still exist, we could decide to prevent superintelligence by building a Gatekeeper AI, which has the sole purpose of preventing technological progress from creating another superintelligence. This Gatekeeper scenario could be combined with an Egalitarian utopia scenario, whereby humans, helper robots, cyborgs and uploads coexist peacefully thanks to the abolition of property rights and a guaranteed income. In this case there would be no incentives to build a superintelligence, according to the author. Alternatively, superintelligence could be prevented by the 1984 scenario (an Orwellian surveillance state bans AI research) or the Reversion scenario (essentially reverting to an Amish-inspired state of primitive technology and forgetting AI).

The remaining five scenarios feature humans and superintelligence coexisting. The humans may feel unhappy in the Zookeeper scenario, in which an omnipotent AI keeps some humans around like zoo animals, either for its own entertainment/curiosity or because it has decided that this is the best way of keeping humans happy and safe. The reverse of this scenario is if humans force a confined AI to do our bidding, such as developing unimaginable technology and wealth -- this AI is called an Enslaved god, and the obvious issue is that its human controllers can use it for good or for bad (and perhaps it might also be unethical if the AI has conscious experiences). A different scenario allows for humans, cyborgs, uploads, and superintelligences to coexist peacefully thanks to property rights; this is the Libertarian utopia scenario, and contrasts economically with the Egalitarian utopia. Finally, we might build a "friendly AI" that acts as a Benevolent dictator (a single benevolent superintelligence that runs the world according to strict rules in order to maximize happiness and eliminate suffering) or a Protector god (an omniscient and omnipotent AI that looks out for human happiness but only intervenes in ways that let us preserve our feeling of being in control). The Protector god might even hide its existence from us. In general, all of these scenarios have some downsides and there is no consensus on which is the best -- another reason to have the conversation!

Chapter 6 looks even further afield by exploring our cosmic endowment billions of years from now. Here, the laws of physics play a bigger role. It is likely that the universe will continue to exist for tens of billions of years, but life as we know it might be destroyed before then -- perhaps due to catastrophic climate change, a major asteroid impact, a supervolcanic eruption, gamma-ray bursts, the Sun becoming too hot and/or swallowing the Earth, or even a collision with the Andromeda galaxy. Hopefully, technology could help us solve these problems. If we do survive, our potential is limited only by the ultimate limits of the cosmos, which could end in a number of different ways: a "Big Chill" (the universe keeps expanding until cold and dead), a "Big Crunch" (the universe collapses back into one point), a "Big Rip" (the universe expands at a much faster rate such that everything is torn apart), a "Big Snap" (the fabric of space itself snaps as it gets stretched), or "Death Bubbles" (parts of space change into a lethal phase and expand at the speed of light). We don't know the ultimate fate of the universe, but it will probably last another 10 to 100 billion years into the future.

If we manage to carefully plan and control an intelligence explosion, life has the potential to flourish for many billions of years beyond the wildest dreams of our ancestors. Superintelligence will allow life to "explode" onto the cosmic arena and expand near the speed of light, settling galaxies by transmitting people in digital form. Technology will plateau at a level limited only by the laws of physics, which is a level vastly greater than today's technology. For example, superintelligent life would be able to make much more efficient use of its resources by building Dyson Spheres (capturing the energy of a star or quasar by entirely surrounding it with a biosphere), building power plants based on nuclear fusion, harvesting energy from evaporating or spinning black holes, or building a generator that uses the sphaleron process -- the latter would be a billion times more efficient than diesel engines in terms of converting matter into energy! Moreover, we may be able to rearrange atoms to convert a given amount of matter into any other desired form of matter, including intelligent entities. We could build better computers, with orders of magnitude more information storage and computing speed. If we run out of resources on Earth, we could settle the cosmos to grow the amount of matter available to us by over a trillion times. How far and how fast we could go depends on the speed of light and the rate of cosmic expansion, which in turn depends on the nature of dark energy. Dark energy is a double-edged sword: it may limit the number of galaxies we can settle and it could fragment our cosmic civilization into isolated clusters; on the other hand, it could also protect us from distant expanding death bubbles or hostile civilizations. The following figure illustrates a universe with multiple independent civilizations undertaking space colonization.

*Figure 6.10 on page 239*

To some extent, a civilization can maintain communication, coordination, trade and control within itself through cosmic engineering projects such as wormholes (assuming they are possible) and/or laser-sail spacecraft with "seed probes". However, since the speed of light still places a limit on this, other methods of control may be necessary -- for instance, a central hub might control a hierarchy of "nodes" by using rewards (e.g. information) or threats (e.g. a local guard AI programmed to destroy the node with a supernova unless it obeys the rules). But what happens when different civilizations collide? Well, they could go to war, but it is more likely that they would cooperate to share information or assimilate each other. Ultimately, however, it is possible that we are alone in the universe; the author explains that the typical distance between civilizations may be so great that we can never make contact with aliens. If we are alone, then perhaps we have a moral responsibility to ensure that life flourishes and does not go extinct -- otherwise the universe will be meaningless.

Speaking of meaning, Chapter 7 is about one of the most important issues in AI safety: goals. It would be a bad idea to give control to machines that do not share our goals, so it is important for us to know what our ultimate goals are and how to give them to AI. Just like memory, computation and learning (explained in Ch. 2), goals also have a physical basis, in the sense that goal-oriented behavior (i.e. behavior more easily explained via its effect than via its cause) arises from a bunch of particles bouncing around; this is possible because many laws of physics can be phrased as optimizing some quantity, either minimizing or maximizing it. For example, through the laws of thermodynamics, nature strives to maximize entropy (or global "messiness"). Through Fermat's principle, a ray of light will bend its trajectory when it enters water, such that it minimizes the travel time to its destination. Interestingly, the "goal" of thermodynamics is aided by biological life, which increases overall dissipation while reducing local entropy through complex, replicating living systems. Life in turn has its own goal, replication (through Darwinian evolution), yet this does not mean that advanced life-forms exclusively optimize for replication. Evolution has programmed organisms to use heuristic rules of thumb, for example "when hungry eat things which taste good", but today's human society is a very different environment from the one evolution optimized our heuristics for -- which is why we face an obesity epidemic and use birth control rather than maximizing baby-making.
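Fermat's principle can be checked numerically. The minimal Python sketch below is my own illustration: the geometry (a ray starting 1 unit above the water and ending 1 unit below it, 2 units away horizontally) and the refractive index of about 1.33 for water are assumptions, and the helper names are hypothetical. It searches for the point where the ray should cross the surface to minimize total travel time, then checks that this point obeys Snell's law, sin θ1 / v1 = sin θ2 / v2.

```python
import math


def travel_time(x, h1, h2, d, v1, v2):
    """Time to go from (0, h1) to the crossing point (x, 0) at speed v1,
    then on to (d, -h2) at speed v2."""
    return math.hypot(x, h1) / v1 + math.hypot(d - x, h2) / v2


def fastest_crossing(h1, h2, d, v1, v2, iterations=200):
    """Ternary search for the x in [0, d] that minimizes travel time.
    This works because travel_time is convex in x."""
    lo, hi = 0.0, d
    for _ in range(iterations):
        m1 = lo + (hi - lo) / 3
        m2 = hi - (hi - lo) / 3
        if travel_time(m1, h1, h2, d, v1, v2) < travel_time(m2, h1, h2, d, v1, v2):
            hi = m2  # the minimum cannot lie to the right of m2
        else:
            lo = m1  # the minimum cannot lie to the left of m1
    return (lo + hi) / 2


if __name__ == "__main__":
    v_air = 1.0               # speed of light in air, normalized
    v_water = v_air / 1.33    # assumed refractive index of water
    h1, h2, d = 1.0, 1.0, 2.0
    x = fastest_crossing(h1, h2, d, v_air, v_water)
    sin1 = x / math.hypot(x, h1)            # sine of angle of incidence
    sin2 = (d - x) / math.hypot(d - x, h2)  # sine of angle of refraction
    # Snell's law predicts these two ratios are equal at the optimum.
    print(sin1 / v_air, sin2 / v_water)
```

The ray crosses the surface past the midpoint, spending more of its path in the faster medium (air); nothing in the code "knows" Snell's law, yet the time-minimizing path satisfies it, which is the sense in which the light seems to have a goal.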

Human psychology has many examples of the brain rebelling against its genes; celibacy, suicide, and artificial sweeteners are just some of them. According to Tegmark, our feelings (e.g. hunger, thirst, pain, lust, compassion) are the ultimate authority guiding our pursuit of goals. And nowadays we are increasingly outsourcing our goals to intelligent machines, which makes the issue of goal-alignment relevant. In a way, even relatively simple things like clocks, dishwashers and highways can be said to exhibit goal-oriented design, because we most easily explain their existence in terms of having a purpose (which is to serve our goals of keeping time, cleaning dishes, and so on). Yet as our devices get smarter and have more complex goals (e.g. self-driving cars), the importance of Friendly AI only grows. There are three sub-problems in goal alignment, all of which are currently unsolved: making AI learn our goals, adopt our goals, and retain our goals.

*Figure 7.2 on page 264*

If you think that an AI that learns our goals will necessarily remain friendly forever, consider the figure above, which illustrates the key tension between the subgoal of curiosity and the AI's ultimate goal. Any sufficiently ambitious ultimate goal will naturally lead to the subgoal of capability enhancement, which includes improving the AI's world model. Yet a better world model may sometimes lead the AI to discover that its old goals are misguided or ill-defined, in which case it may change them. Additionally, the subgoals of self-preservation and resource acquisition may cause problems for humans, for example by leading the AI to resist shutdown attempts, or even to rearrange the Solar System into a giant computer. There are other ethical issues as well: whose goals should we give to AI? Can we derive ethical principles from scratch? Is there a minimum set of ethical principles we can agree we want the future to satisfy? Should we strive to maximize the amount of consciousness in our universe, or something else, like algorithmic complexity? Such questions highlight the need to rekindle the age-old debates of philosophy.

Finally, in Chapter 8 the author dives into the problem of consciousness. The question of whether AIs could have subjective conscious experiences is relevant to ethical ideas like giving AIs rights and preventing them from suffering. It also matters for the Descendants and Conquerors scenarios (from Ch. 5) because if humans get replaced by AIs without consciousness, then the universe will turn into an unconscious zombie apocalypse, wherein there is nobody to give meaning to the cosmos. Another interesting problem is whether brain emulation will preserve consciousness; if it does not, then uploading could be considered a form of suicide. Therefore, the challenge before us is to predict which physical systems are conscious.

*Figure 8.1 on page 285*

But we also face two harder problems: predicting qualia, and explaining why anything at all is conscious. In other words, how do physical properties (e.g. particle arrangements) determine what a conscious experience is like in terms of colors, sounds, tastes and smells, and why can clumps of matter be conscious? These two harder problems may seem beyond science, but if we make enough progress on the "easier" question (what physical properties distinguish conscious from unconscious systems?) then we can build on it to tackle the tougher questions. Although we do not fully understand consciousness, we have some clues. For example, neuroscience experiments tell us that there are behavioral correlates of consciousness -- we tend to be conscious of behaviors that involve unfamiliar situations, self-control and abstract reasoning, whereas we are often unconscious of things like breathing and blinking. There are also neural correlates of consciousness: thanks to technology such as fMRI, EEG, MEG, ECoG, ePhys and fluorescent voltage sensing, we can see which brain areas are responsible for conscious experiences, and it seems like consciousness mainly resides in the thalamus and rear part of the cortex (although the research is controversial). Interestingly, our consciousness also seems to live in the past, since it lags behind the outside world by about a quarter of a second.

If we want to speak about conscious machines, we need a general theory of consciousness. Such a theory would need to mathematically predict the results of consciousness experiments. Tegmark suggests a physics perspective, based on the physical correlates of consciousness, which can be generalized from brains to machines. As far as we can tell, consciousness seems to have properties that go above and beyond being a pattern of particles; it requires a particular kind of integrated and autonomous information processing. According to Tegmark, consciousness is "the way information feels when being processed in certain complex ways" (p. 301). Giulio Tononi has proposed a mathematically precise theory for this, which is called Integrated Information Theory (IIT). Basically, IIT uses a quantity ("integrated information") denoted by Phi which measures the inability to split a physical process into independent parts; it therefore represents how much different parts of a system know about each other. Of course, the implication here is that consciousness is also substrate-independent: it's the structure of the information processing that matters!

While there are many controversies of consciousness, a very interesting one is how AI consciousness would feel. It is likely that the space of possible AI experiences is much bigger than the space of all human experiences. It is also likely that an artificial consciousness will have millions of times more experiences per second than we do, because it can send signals at the speed of light... unless the AI is the size of a galaxy, in which case it may think only one thought every 100,000 years. Perhaps most controversially, Tegmark argues that any conscious agent, artificial or not, will feel like it has free will in the sense that it would know exactly why it made a particular choice (in terms of goals). This is because an entity cannot predict what its decision will be until it has finished running the computation, and the subjective experience of free will is simply how the computation feels from inside. Here Tegmark has a remarkably similar view to that of Eliezer Yudkowsky, who also believes that the deterministic laws of physics are compatible with goal-oriented behavior. Multiple other authors share this view, including Gary Drescher, Sean Carroll, Simon Blackburn, and David Deutsch. I am sympathetic to this view, and I particularly like this quote from Tegmark:
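The speed comparisons above are order-of-magnitude arguments, and the arithmetic is easy to reproduce. In this minimal Python sketch, the neural signal speed (about 100 m/s), the brain size (0.15 m) and the galaxy diameter (100,000 light-years) are my own rough assumptions rather than numbers quoted from the book, and the sketch further assumes that thinking speed is limited only by how long signals take to cross the system.

```python
# Order-of-magnitude sketch of the signal-speed argument (illustrative only).

SPEED_OF_LIGHT_M_S = 299_792_458          # exact, by definition of the metre
NEURAL_SIGNAL_M_S = 100.0                 # assumption: fast myelinated axons
SECONDS_PER_YEAR = 365.25 * 24 * 3600     # Julian year
LIGHT_YEAR_M = SPEED_OF_LIGHT_M_S * SECONDS_PER_YEAR


def crossing_time_s(size_m: float, signal_speed_m_s: float) -> float:
    """Time for a signal to travel once across a system of the given size."""
    return size_m / signal_speed_m_s


def speedup(fast_m_s: float, slow_m_s: float) -> float:
    """How many times faster one signal speed is than another."""
    return fast_m_s / slow_m_s


if __name__ == "__main__":
    # Light-speed signalling vs. neural signalling: about 3 million times faster.
    print(f"speedup: {speedup(SPEED_OF_LIGHT_M_S, NEURAL_SIGNAL_M_S):.2e}")

    # A brain-sized system (assumed 0.15 m across) using neural signals.
    print(f"brain crossing: {crossing_time_s(0.15, NEURAL_SIGNAL_M_S):.4f} s")

    # A galaxy-sized mind (assumed 100,000 light-years across) at light speed.
    galaxy_s = crossing_time_s(100_000 * LIGHT_YEAR_M, SPEED_OF_LIGHT_M_S)
    print(f"galaxy crossing: {galaxy_s / SECONDS_PER_YEAR:,.0f} years")
```

The same formula (size divided by signal speed) explains both halves of the claim: faster signals make a small mind quicker, while sheer size can make a huge mind slower.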

"Some people tell me that they find causality degrading, [...] what alternatives would they prefer? Don't they want it to be their own thought processes (the computations performed by their brains) that make their decisions?" (p. 312)Tegmark concludes the chapter by considering how positive experiences (including happiness, goodness, beauty, meaning and purpose) depend on the existence of consciousness, and how a universe without consciousness would be an astronomical waste of space. With AI, humans will not be exceptional anymore, as we will not be the smartest around. But we can still be proud of experiencing qualia.

***

Life 3.0 is a wonderful book: it is well written and makes complicated topics accessible to a broad audience. The numerous figures and tables throughout help to engage the reader, and the "bottom line" sections that summarize each chapter in bullet points serve a useful function for readers who wish to refresh their memories. But what I also like is Max Tegmark's transparent and conversational approach; as I said before, he tells the reader which parts of the book are speculative and which are less so. Moreover, he also encourages his audience to participate in the conversation about AI and has even set up a survey on the FLI website.

When I checked the website (ageofai.org), I noticed an interesting discrepancy: while the majority of people (between two-thirds and three-quarters) said that they want there to be superintelligence, the most popular of the 12 aftermath scenarios was the Egalitarian utopia, which according to the book does not contain superintelligence! Perhaps the website failed to make this clear, or perhaps people are aware of this but voted for it anyway in the hope that the Egalitarian utopia would eventually develop friendly AI? In any case, the next most popular options were Libertarian utopia and Protector god. One wonders whether people's political biases explain the top two preferences...

Another plus point for Life 3.0 is that the author succeeds in humanizing himself by telling his own story, including his role in the beneficial-AI movement, and even sharing photographs of himself and his wife. The reader may, as I did, get the impression that Tegmark is a sincere and well-intentioned person. Indeed, he does not really denigrate his opponents' views either. This is a book that I would recommend to everyone, and so I would give it 5/5 stars. If there is one weakness, it is probably the lack of original content beyond the aftermath scenarios and the FLI tale. Most, if not all, of the arguments relating to unemployment, the intelligence explosion, goals and so on have been made before by Bostrom, Yudkowsky, Brynjolfsson & McAfee, and others. The physics discussed in Chapter 6 is probably not too far removed from standard cosmological theories, plus a sprinkle of inspiration from sci-fi novels. That being said, the presentation of the content is unique, and Tegmark has a talent for explaining things. Given that AI safety is still less mainstream than many other non-fiction topics, good presentation is extra important.

For those who find this topic fascinating, check out my own AI safety page here. You may also be interested in the projects of Effective Altruism, as well as in rationality, which plays a role in AI-related research.

If you start reading more about doom-and-gloom scenarios, just keep in mind Tegmark's words about mindful optimism. If the fact that there are people who dedicate their lives to preventing human extinction doesn't warm your heart, I don't know what will.
